How would a mismatch between the user query language and the source document language affect RAG? I wanted to find out, so I built a multilingual RAG chatbot.

Table of Contents

Multilingual Data

As the datasource, I used the Japanese-English Software Manual Parallel Corpus. It contains over 400,000 parallel sentences in English and Japanese. However, for my test, I used only the subset from the PostgreSQL documentation (23,000 sentences). After joining the individual sentences into documents, I ended up with 1730 documents.

I think the crucial part of the data is that the sentences are parallel, they are used in the same context, convey the same meaning, and are translated from each other. This isn’t Wikipedia, where articles on the same topic in different languages are written separately using localized sources. As a consequence, semantic search can correctly identify equivalent documents across languages because those documents have the same meaning.

Multilingual RAG

I have created a single collection (index) of documents in the Pinecone vector database. The collection contains two records for each source file, one in Japanese and one in English. As shown below, I have also added a language field to the documents as metadata.

documents.push({

id: name + "_jp",

values: jpText,

source: file,

name: name,

language: 'japanese'

});

documents.push({

id: name + "_en",

values: enText,

source: file,

name: name,

language: 'english'

});

While retrieving the documents, I pass the language selected by the user as a metadata filter. The query is always in English. Additionally, the AI model is designed to respond in English, regardless of the language of the retrieved context.

const results = await namespace.searchRecords({

query: {

inputs: { text: query },

topK: 1,

filter: {

language: languageName

}

},

fields: ['values', 'name'],

});

To test the pipeline, I use basic semantic search. I pass the user query to the RAG pipeline verbatim and retrieve the most similar document. A query transformation could improve the results, but it would also make it impossible to compare the results between languages.

Results

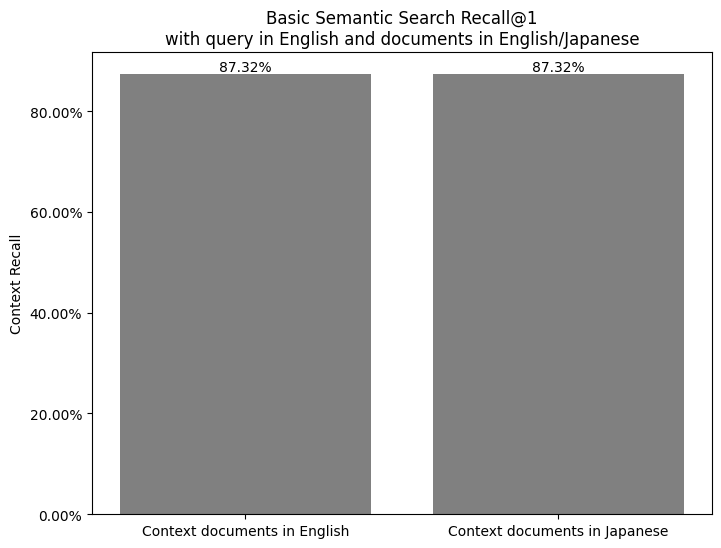

While running an evaluation in Ragas, I have noticed that the basic semantic search returns the correct document 87% of the time, and that it doesn’t matter if the retrieved document is in Japanese or English. Data sources were interchangeable. The incorrectly retrieved documents also showed the same pattern. The RAG was making the same mistakes, no matter the language selected by the user.

Conclusions

What does this mean in practice? First, you’re free to use documents in multiple languages as your source of truth for RAG (as long as your embedding model supports it). There is no need to stick to just one. Second, a single RAG pipeline can serve users across languages without extra complexity. And finally, you can confidently mix languages in your context database, knowing the system will still retrieve the correct information (but instruct the model that generates the final answer to translate it to the user’s language).

However, if you create an index that is too large, you will encounter problems with Approximate Nearest Neighbors (ANN) search. Therefore, if you filter by metadata, consider splitting your indexes.