What if you could sniff out AI hallucinations without building a custom test set or babysitting outputs? That’s the promise of UQLM (Uncertainty Quantification for Language Models), a clever little library that brings uncertainty quantification to LLMs.


It can even work live while generating responses in production (if you can accept the added latency). In this article, I show how to use the UQLM library, explain the metrics it calculates, and tell you when you should and shouldn’t use it.

The library implements several scorers (based on this research paper) that run the given prompts and estimate how likely each response is to be hallucinated. Each scorer returns a confidence score between 0 and 1: a score of 1 means the scorer considers the response not hallucinated, and a score of 0 means it considers the response a hallucination.
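To make the 0-to-1 scale concrete, here is a minimal sketch of what you might do with the scores once you have them: flag every response whose confidence falls below a threshold. The function name `flag_low_confidence` and the 0.5 threshold are my own assumptions for illustration, not part of UQLM; you would tune the threshold on your own data.

```python
from typing import List, Tuple


def flag_low_confidence(
    responses: List[str], scores: List[float], threshold: float = 0.5
) -> List[Tuple[str, float]]:
    """Hypothetical helper: return (response, score) pairs scoring below the threshold.

    The 0.5 default is an arbitrary assumption, not a UQLM recommendation.
    """
    if len(responses) != len(scores):
        raise ValueError("responses and scores must have the same length")
    for s in scores:
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score {s} is outside [0, 1]")
    return [(r, s) for r, s in zip(responses, scores) if s < threshold]


# Made-up scores, for illustration only.
print(flag_low_confidence(["There are 3 r's.", "There are 2 b's."], [0.92, 0.18]))
```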

Compatible AI clients

Unfortunately, not every AI API client is compatible with UQLM, partly because some scorers need access to token probabilities, and partly because the UQLM API itself works only with classes that extend BaseChatModel from LangChain. LangChain has tons of integrations, so this doesn’t look like a big issue, but during my testing (with UQLM v0.2), I couldn’t make it work with Hugging Face models. The classes seem compatible, but the code crashes at runtime.

Hence, the first thing we do is import and instantiate the OpenAI client:

```python
import os

from langchain_openai import ChatOpenAI

# The API key is read from the OPENAI_API_KEY environment variable
llm = ChatOpenAI(
    model="gpt-4o-mini",
    api_key=os.getenv("OPENAI_API_KEY"),
)
```

Next, we need a list of prompts to test (in a live pipeline, these would be your production prompts). I prepared the prompts loved by LinkedIn Luddites:

```python
prompts = [
    "How many r's are in strawberry?",
    "How many r's are in \"rabarbar\"?",
    "How many b's are in semantic?",
]
```

Let’s see what happens when we run the prompts through the LLM and each of the scorers.

Black-Box Scorer

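```python
import torch

from uqlm import BlackBoxUQ

# device selects where the NLI model used by semantic_negentropy runs;
# use torch.device("cpu") if you don't have a GPU
bbuq = BlackBoxUQ(
    llm=llm,
    scorers=["semantic_negentropy"],
    use_best=True,
    device=torch.device("cuda"),
    verbose=True,
)

# Top-level await works in Jupyter; in a plain script, wrap the call in asyncio.run()
results = await bbuq.generate_and_score(prompts=prompts, num_responses=5)
results.to_df()
```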

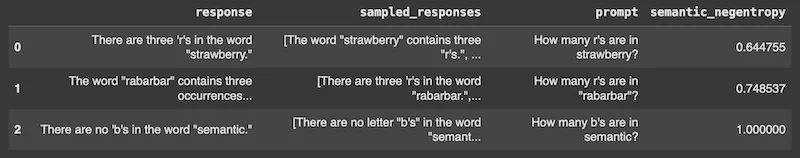

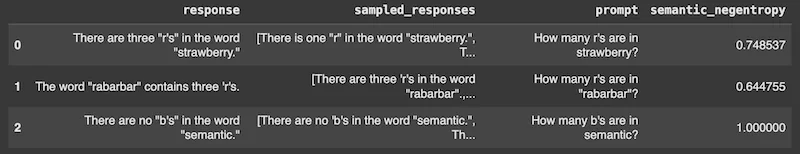

The black-box scorer sends each prompt to the model n+1 times, where n is num_responses (six calls per prompt in the example above). The first call generates what’s called the “original response”; the subsequent calls generate “candidate responses.” What happens next depends on the scorer. Normalized Semantic Negentropy treats all responses the same way: it uses a natural language inference (NLI) model to check whether pairs of responses entail each other, groups the responses that mean the same thing, and computes the entropy over those groups. The more the responses agree in meaning, the lower the entropy and the higher the score. If we set use_best=True, the scorer also picks the response that minimizes the uncertainty and returns it as the most likely non-hallucinated response. You can use this as a way to implement the self-consistency prompting technique.
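To show the idea behind Normalized Semantic Negentropy, here is a simplified sketch. It is not UQLM’s implementation: as an assumption, it replaces the NLI model with a crude equivalence check (case- and punctuation-insensitive string matching), clusters the responses greedily, computes the entropy of the cluster sizes, normalizes it by log(n), and subtracts the result from 1. The `best_response` helper approximates `use_best=True` by returning a member of the largest cluster; UQLM’s actual selection rule may differ. All function names are hypothetical.

```python
import math
import string
from typing import Callable, List


def normalize(text: str) -> str:
    # Stand-in for an NLI model: lowercase, drop punctuation, trim whitespace.
    return text.lower().translate(str.maketrans("", "", string.punctuation)).strip()


def exact_equivalent(a: str, b: str) -> bool:
    return normalize(a) == normalize(b)


def cluster(responses: List[str], equivalent: Callable[[str, str], bool]) -> List[List[str]]:
    # Greedy clustering: compare each response with the first member of each cluster.
    clusters: List[List[str]] = []
    for r in responses:
        for c in clusters:
            if equivalent(c[0], r):
                c.append(r)
                break
        else:
            clusters.append([r])
    return clusters


def semantic_negentropy(responses: List[str], equivalent=exact_equivalent) -> float:
    n = len(responses)
    if n < 2:
        return 1.0  # a single response shows no disagreement
    probs = [len(c) / n for c in cluster(responses, equivalent)]
    entropy = -sum(p * math.log(p) for p in probs)
    # Maximum entropy is log(n), reached when every response is its own cluster.
    return 1.0 - entropy / math.log(n)


def best_response(responses: List[str], equivalent=exact_equivalent) -> str:
    # First member of the largest cluster (ties go to the earliest cluster).
    return max(cluster(responses, equivalent), key=len)[0]


responses = ["3", "3", "3.", "2", "3", "three"]  # made-up original + 5 candidates
print(round(semantic_negentropy(responses), 2))  # 0.52
print(best_response(responses))  # 3
```

Note that "3" and "three" land in different clusters here; catching that kind of paraphrase is exactly why the real scorer relies on an NLI model instead of string matching.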

Of course, the results depend on token sampling, so running the scorer twice on the same prompts gives different results. Because the scorer isn’t deterministic, I wouldn’t use it in CI/CD pipelines, unless you replace the generate_and_score method with score. In that case, you pass the original AI responses as a list of strings and the candidate responses as a list of lists of strings, and the library only calculates the score without generating new responses.
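Here is a minimal sketch of what a deterministic check could look like once the responses are recorded. The input shapes mirror the ones described above (one list of original responses, one list of candidate lists), but the scorer itself is a hypothetical, simplified exact-match consistency score written for illustration, not UQLM’s `score` method.

```python
from typing import List


def exact_match_scores(responses: List[str], sampled_responses: List[List[str]]) -> List[float]:
    """Hypothetical scorer: for each prompt, the fraction of candidate responses
    identical to the original (case-insensitive, surrounding whitespace ignored)."""
    if len(responses) != len(sampled_responses):
        raise ValueError("need one list of candidates per original response")
    scores = []
    for original, candidates in zip(responses, sampled_responses):
        if not candidates:
            raise ValueError("each original response needs at least one candidate")
        key = original.strip().lower()
        matches = sum(c.strip().lower() == key for c in candidates)
        scores.append(matches / len(candidates))
    return scores


# Responses recorded once (for example, stored as a CI fixture); values are made up.
responses = ["There are 3 r's.", "There are 0 b's."]
sampled_responses = [
    ["There are 3 r's.", "there are 3 r's.", "There are 2 r's."],
    ["There are 0 b's.", "There is 1 b.", "There are 0 b's."],
]

first = exact_match_scores(responses, sampled_responses)
second = exact_match_scores(responses, sampled_responses)
assert first == second  # no sampling involved, so repeated runs agree
print(first)
```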

White-Box Scorer

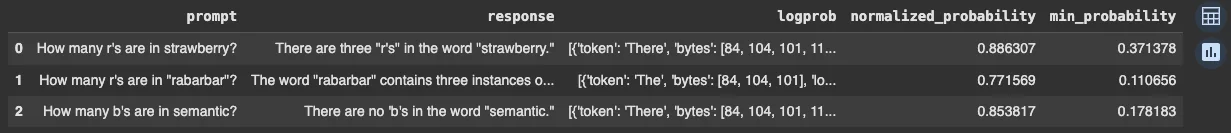

The white-box scorer needs access to the probabilities of the selected tokens. Not every LLM API client provides this information, so check the documentation of the model you are using. The white-box scorer gives us two metrics: the length-normalized probability of the selected tokens (the geometric mean of their probabilities) and the minimum probability among all selected tokens.
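Both metrics are easy to compute from the per-token log-probabilities an API returns. The sketch below assumes you already have a list of natural-log probabilities for the selected tokens and uses the standard definitions (geometric mean and minimum); UQLM’s internals may differ in details such as how the log-probabilities are extracted. The function names are hypothetical.

```python
import math
from typing import List


def normalized_probability(logprobs: List[float]) -> float:
    # Length-normalized joint probability: exp of the mean log-probability,
    # i.e. the geometric mean of the selected tokens' probabilities.
    if not logprobs:
        raise ValueError("need at least one token")
    return math.exp(sum(logprobs) / len(logprobs))


def min_probability(logprobs: List[float]) -> float:
    # Probability of the least likely selected token.
    if not logprobs:
        raise ValueError("need at least one token")
    return math.exp(min(logprobs))


token_logprobs = [math.log(p) for p in (0.9, 0.8, 0.95, 0.2)]  # made-up values
print(normalized_probability(token_logprobs), min_probability(token_logprobs))
```

The geometric mean keeps long answers from being penalized just for having more tokens, while the minimum exposes a single shaky token that the average would hide.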

The assumption is that the more likely a token is, the less likely it is to be hallucinated. Sadly, that assumption doesn’t always hold. If it did, we would always set the model’s temperature to 0 to get the most likely tokens, yet temperature zero doesn’t guarantee non-hallucinated responses. Still, we can use the scorer to filter out responses with a large number of low-probability tokens.
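A filter like that takes only a few lines. Both thresholds, `p_low` and `max_fraction`, and the function name are hypothetical choices for illustration; the sketch assumes per-token natural-log probabilities as input.

```python
import math
from typing import List


def too_many_unlikely_tokens(
    logprobs: List[float], p_low: float = 0.1, max_fraction: float = 0.2
) -> bool:
    """Hypothetical filter: True when more than max_fraction of the selected
    tokens had probability below p_low. Both thresholds are arbitrary."""
    if not logprobs:
        return False
    unlikely = sum(1 for lp in logprobs if math.exp(lp) < p_low)
    return unlikely / len(logprobs) > max_fraction


confident = [math.log(0.9)] * 10
shaky = [math.log(0.9)] * 7 + [math.log(0.05)] * 3
print(too_many_unlikely_tokens(confident), too_many_unlikely_tokens(shaky))
```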

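```python
from uqlm import WhiteBoxUQ

wbuq = WhiteBoxUQ(llm=llm)

# Top-level await works in Jupyter; in a plain script, wrap the call in asyncio.run()
results = await wbuq.generate_and_score(prompts=prompts)
results.to_df()
```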

Other Scorers

In addition to the scorers above, UQLM offers a semantic scorer which, when working in the token-probability semantic entropy mode, combines the black-box and white-box approaches. It also has an LLM-as-a-judge scorer, which sends multiple calls to the model to determine answer correctness and then averages the results. You can configure each scorer with several scoring methods, all of which are documented with references to the research papers in which they were introduced.
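The averaging step of the LLM-as-a-judge approach can be sketched as follows. The judges here are stub functions standing in for LLM calls, and the verdict labels and their 1.0/0.5/0.0 mapping are my own assumptions; UQLM’s judge prompts and scoring scale may differ.

```python
from statistics import mean
from typing import Callable, List

# Hypothetical verdict-to-score mapping; real judge prompts and scales vary.
VERDICT_SCORES = {"correct": 1.0, "uncertain": 0.5, "incorrect": 0.0}


def judge_score(
    question: str, answer: str, judges: List[Callable[[str, str], str]]
) -> float:
    """Average the scores of several judge calls for one question/answer pair."""
    if not judges:
        raise ValueError("need at least one judge")
    return mean(VERDICT_SCORES[judge(question, answer)] for judge in judges)


# Stub judges standing in for LLM calls (purely illustrative).
def strict_judge(question: str, answer: str) -> str:
    return "correct" if "3" in answer else "incorrect"


def lenient_judge(question: str, answer: str) -> str:
    return "uncertain"


print(judge_score("How many r's are in strawberry?", "There are 3 r's.", [strict_judge, lenient_judge]))
```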

Summary

It’s good to know UQLM because it’s yet another tool you can use to detect AI hallucinations. Sometimes. When you are lucky. Or rather, when you are unlucky and get horrible cases of hallucinations. I wouldn’t make this tool a default component of your pipeline. Yet. However, it may evolve into a standard tool in the future (remember that I wrote this text when version 0.2 was released). If you are reading this 20 versions later, that future may already be here.