Update: This article has been updated in November 2023 to include changes in the OpenAI SDK version 1.1.1+ and new features announced during OpenAI DevDay 2023.

Table of Contents

- How Does Function Calling Work in ChatGPT?

- How to Define Functions for ChatGPT?

- How to Call a Function from ChatGPT?

- How to Build a Slack Chatbot?

- How to Make The Chatbot Production-Ready?

- How to Write Functions For AI?

- How to Debug AI Interactions

Since the release of the gpt-4-0613 and gpt-3.5-turbo-0613 models, the OpenAI Chat Completions API can interact with user-defined functions. The feature lets us give AI access to tools without additional libraries such as LangChain. For a detailed comparison, see my article on the difference between LangChain Agents and OpenAI Functions.

In this article, I will demonstrate how to create an AI-powered chatbot for Slack and give AI access to a REST API. We will simulate the API of an HR system using the reqres.in mock API.

How Does Function Calling Work in ChatGPT?

Before we begin, let’s clarify one thing: AI doesn’t directly call any functions. Instead, ChatGPT generates a response containing information about the function it would like to call and the function’s arguments. It’s up to us to call the function on its behalf in our code and send back a message with the function result.
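To make the round trip concrete, here is a minimal sketch of the messages involved, written as plain dictionaries. The function get_employee_count, the identifier call_abc123, and the canned result are made up for illustration. The real SDK returns objects rather than dictionaries, so treat the shapes as a simplification.

```python
import json

# What the model sends back instead of a plain answer (simplified to a dict).
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_abc123",  # hypothetical identifier
            "type": "function",
            "function": {
                "name": "get_employee_count",  # hypothetical function
                "arguments": '{"department": "HR"}',  # arguments arrive as a JSON string
            },
        }
    ],
}


def get_employee_count(department):
    # Stand-in for real business logic.
    return {"department": department, "count": 12}


def run_tool_call(tool_call):
    """Run the requested function ourselves and build the reply message."""
    args = json.loads(tool_call["function"]["arguments"])
    result = get_employee_count(**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


tool_message = run_tool_call(assistant_message["tool_calls"][0])
```

The tool message goes back to the model in the next request, and only then does the model produce an answer for the user.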

How to Define Functions for ChatGPT?

The Chat Completions API accepts two additional parameters: tools and tool_choice.

The tools parameter contains a list of dictionaries describing the available functions. Each function needs a name, a description explaining what it does, and a parameters dictionary. The parameters dictionary informs the AI about the argument names and types. Additionally, the function definition may specify which arguments are required or what values are allowed.

The tool_choice parameter determines whether ChatGPT can call a function from the provided list. Setting the parameter to auto allows AI to call any function. On the other hand, setting the tool_choice value to none turns off the function calling by ChatGPT. We can use the tool_choice parameter to toggle the function-calling feature.
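Besides auto and none, tool_choice also accepts an object that forces a call to one named function. The helper below is a small sketch of the three variants; the name tool_choice_for and its mode labels are my own and not part of the SDK.

```python
def tool_choice_for(mode, function_name=None):
    """Return a value for the tool_choice parameter (helper name is hypothetical)."""
    if mode == "auto":
        # The model decides whether to call a function.
        return "auto"
    if mode == "none":
        # Function calling is switched off for this request.
        return "none"
    if mode == "force":
        # The model must call the named function.
        return {"type": "function", "function": {"name": function_name}}
    raise ValueError(f"Unknown mode: {mode}")
```

For example, tool_choice_for("force", "call_rest_api") produces the value to pass as tool_choice when the reply must be a call to call_rest_api.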

In my example, I want ChatGPT to use a REST API. Therefore, I provide a generic call_rest_api function that can send any HTTP request to any URL with an optional JSON body.

self.functions = [
    {
        "type": "function",
        "function": {
            "name": "call_rest_api",
            "description": "Sends a request to the REST API",
            "parameters": {
                "type": "object",
                "properties": {
                    "method": {
                        "type": "string",
                        "description": "The HTTP method to be used",
                        "enum": ["GET", "POST", "PUT", "DELETE"],
                    },
                    "url": {
                        "type": "string",
                        "description": "The URL of the endpoint. Value placeholders must be replaced with actual values.",
                    },
                    "body": {
                        "type": "string",
                        "description": "A string representation of the JSON that should be sent as the request body.",
                    },
                },
                "required": ["method", "url"],
            },
        }
    }
]

As I want AI to interact with a REST API, I need to define the available endpoints and their functionalities. Instead of describing the functions in a single string variable, I prefer using dictionaries to keep the code cleaner. Later, I can pass them as a JSON string.

self.available_apis = [
    {'method': 'GET', 'url': '/api/users?page=[page_id]', 'description': 'Lists employees. The response is paginated. You may need to request more than one page to get them all. For example, /api/users?page=2.'},
    {'method': 'GET', 'url': '/api/users/[user_id]', 'description': 'Returns information about the employee identified by the given id. For example, /api/users/2'},
    {'method': 'POST', 'url': '/api/users', 'description': 'Creates a new employee profile. This function accepts JSON body containing two fields: name and job'},
    {'method': 'PUT', 'url': '/api/users/[user_id]', 'description': 'Updates employee information. This function accepts JSON body containing two fields: name and job. The user_id in the URL must be a valid identifier of an existing employee.'},
    {'method': 'DELETE', 'url': '/api/users/[user_id]', 'description': 'Removes the employee identified by the given id. Before you call this function, find the employee information and make sure the id is correct. Do NOT call this function if you didn\'t retrieve user info. Iterate over all pages until you find it or make sure it doesn\'t exist'}
]

Note that my instructions are pretty verbose. I want to ensure AI fully understands the available functionality and to prevent accidental or incorrect usage of the DELETE function.

Now, I must inform ChatGPT about the chatbot’s capabilities and expected behavior. My chatbot backend sends the following messages at the beginning of every conversation to set the context:

self.messages = [
    {"role": "user", "content": "You are an HR helper who makes API calls on behalf of an HR representative"},
    {"role": "user", "content": "You have access to the following APIs: " + json.dumps(self.available_apis)},
    {"role": "user", "content": "If a function requires an identifier, list all employees first to find the proper value. You may need to list more than one page"},
    {"role": "user", "content": "If you were asked to create, update, or delete a user, perform the action and reply with a confirmation telling what you have done."}
]

In the next step, I will implement the call_rest_api function. AI generates the arguments as a JSON string, so the function must parse them before use. The function must also always return a string. Otherwise, ChatGPT won’t understand what happened. ChatGPT understands HTTP status codes, so returning the status code is sufficient when the request doesn’t produce any data.

def call_rest_api(self, arguments):
    # The model sends the arguments as a JSON string
    arguments = json.loads(arguments)
    url = 'https://reqres.in' + arguments['url']
    # "body" is declared as a string containing JSON, so decode it before sending
    body = arguments.get('body') or {}
    if isinstance(body, str):
        body = json.loads(body)

    response = None
    if arguments['method'] == 'GET':
        response = requests.get(url)
    elif arguments['method'] == 'POST':
        response = requests.post(url, json=body)
    elif arguments['method'] == 'PUT':
        response = requests.put(url, json=body)
    elif arguments['method'] == 'DELETE':
        response = requests.delete(url)
    else:
        raise ValueError(arguments)

    # Always return a string so the model can read the outcome
    if response.status_code == 200:
        return json.dumps(response.json())
    else:
        return f"Status code: {response.status_code}"

How to Call a Function from ChatGPT?

When sending a user’s message to ChatGPT, we include the list of available functions:

def call_ai(self, new_message):
    if new_message:
        self.messages.append({"role": "user", "content": new_message})

    response = self.client.chat.completions.create(
        model="gpt-3.5-turbo-1106",
        messages=self.messages,
        tools=self.functions,
        tool_choice="auto",
    )
    ...

Now, let’s focus on an important part. We want our code to:

- Call the functions requested by AI. It may be more than one function!

- Pass the response back to AI.

- Allow AI to call multiple functions in a row and wait until ChatGPT returns a response intended for the user.

- Only return the last message to the user, excluding functions, their parameters, and responses.

In the call_ai function, we need to handle the responses from ChatGPT and distinguish between messages intended for the user and function calls. Any messages with a non-empty tool_calls field indicate a function call. Remember that AI may request multiple function calls in a single response! tool_calls is a list!

When we receive such requests, we need to call the functions with the provided arguments and pass the response back to ChatGPT as a message with role set to tool, the function name, and the tool call identifier. We store the function response as the content of the message.

    # This is a continuation of the call_ai function!
    msg = response.choices[0].message
    self.messages.append(msg)
    if msg.content:
        logger.debug(msg.content)
    tool_calls = msg.tool_calls

    if tool_calls:
        msg.content = ""  # required due to a bug in the SDK. We cannot send a message with None content
        for tool_call in tool_calls:
            # we may get a request to call more than one function(!)
            function_name = tool_call.function.name
            function_args = tool_call.function.arguments
            if function_name == 'call_rest_api':
                # tool_call.function.arguments contains the arguments as a JSON string
                logger.debug(function_args)
                # Here, we call the requested function and get a response as a string
                function_response = self.call_rest_api(function_args)
                logger.debug(function_response)
                # We add the response to messages
                self.messages.append({
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": function_name,
                    "content": function_response
                })
        self.call_ai(new_message=None)  # pass the function response back to AI

Since we recursively call call_ai, the function won’t exit until we receive a message intended for the user. Recursion allows AI to chain multiple function interactions in a row because we don’t exit the call_ai function too quickly.
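The recursive version has no upper bound on the number of rounds. As an alternative, here is a sketch of the same flow written as a loop with a cap. It is not the code used in this article: the names run_conversation, ask_model, run_tool, and MAX_TOOL_ROUNDS are assumptions, messages are plain dictionaries, and the model is replaced with a stub so the example runs without an API key.

```python
import json

MAX_TOOL_ROUNDS = 5  # assumed limit; tune it for your use case


def run_conversation(ask_model, run_tool, messages, max_rounds=MAX_TOOL_ROUNDS):
    """Keep calling the model until it answers without requesting tools."""
    for _ in range(max_rounds):
        reply = ask_model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]  # message intended for the user
        for call in tool_calls:
            function = call["function"]
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": run_tool(function["name"], function["arguments"]),
            })
    raise RuntimeError("The model kept requesting tools; giving up")


def fake_model(messages):
    # Stubbed model: requests one tool call, then answers.
    if messages[-1]["role"] == "tool":
        return {"role": "assistant", "content": "There are 2 employees."}
    arguments = json.dumps({"method": "GET", "url": "/api/users"})
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {"name": "call_rest_api", "arguments": arguments},
        }],
    }


def fake_tool(name, arguments):
    # Stubbed tool: returns a canned response as a string.
    return json.dumps({"total": 2})


history = [{"role": "user", "content": "How many employees do we have?"}]
answer = run_conversation(fake_model, fake_tool, history)
```

The cap turns a model that never stops requesting tools into an explicit error instead of an ever-growing conversation.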

The code is stateful and contains the entire chat history of a conversation. Instead of returning the last message from call_ai, we can add a get_last_message function to retrieve the response for the user:

def get_last_message(self):
    return self.messages[-1].content

I encapsulated all of the above code in an AI class. Note I had to instantiate the API Client, too! The class structure looks like this:

import json
import logging

from openai import OpenAI
import requests

logger = logging.getLogger(__name__)


class AI:
    def __init__(self):
        self.available_apis = ...
        self.functions = ...
        self.messages = ...
        self.client = OpenAI(
            api_key=API_KEY
        )

    def call_rest_api(self, arguments):
        ...

    def call_ai(self, new_message):
        ...

    def get_last_message(self):
        ...

How to Build a Slack Chatbot?

Once we have completed the code implementing AI interactions, we can move on to connecting our service with Slack. The service should listen to events from Slack and pass the messages to AI.

I have provided detailed instructions for setting up a Slack app in my article on building an AI-powered Slackbot for data analysis, so here, I will focus on the code implementation.

The Slackbot will respond to messages in a specific channel. Additionally, the service will only perform actions when the bot is mentioned in a message. We will use the app_mention event to detect such messages.

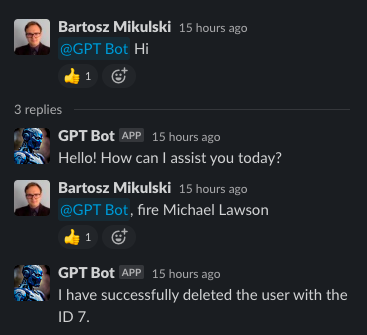

Since calling ChatGPT may take some time, we will handle requests in a separate thread. To acknowledge that we have received a message, the chatbot will react to all messages with a thumbs-up emoji. Later, the service will reply in the same Slack thread.

Take a look at the code I used to store the conversations:

conversations = {}

...

ai = conversations.get(thread_ts, AI())

conversations[thread_ts] = ai

I have stored them in a dictionary, using the thread_ts as the key. This way, we can handle multiple conversations simultaneously and maintain the chat history for each of them. However, I have not removed the conversations from the dictionary, so the dictionary will continue to grow until it reaches the memory limit. In a production environment, we should implement a mechanism to remove old conversations.

If the Slack channel is public and you don’t want multiple people talking with the bot in the same thread, identify conversations using both the thread and the user id.
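A minimal sketch of that idea: the dictionary key becomes a tuple of the thread and the user. The helper names conversation_key and get_conversation are hypothetical, and factory stands for whatever creates a new conversation object (for example, the AI class).

```python
conversations = {}


def conversation_key(thread_ts, user_id):
    """Identify a conversation by the Slack thread and the user who wrote in it."""
    return (thread_ts, user_id)


def get_conversation(thread_ts, user_id, factory):
    """Return the stored conversation or create it on first use."""
    key = conversation_key(thread_ts, user_id)
    if key not in conversations:
        conversations[key] = factory()  # e.g. factory=AI
    return conversations[key]
```

Unlike conversations.get(thread_ts, AI()), this version calls the factory only when the conversation does not exist yet, so no throwaway object is built on every message.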

import traceback
from queue import Queue, Full
from threading import Thread

import slack
from flask import Flask
from slackeventsapi import SlackEventAdapter

from ai import AI

SLACK_CHANNEL = "#slack-bots"
SLACK_TOKEN = ... # Preferably, pass the token as an environment variable or read from a file
SLACK_SIGNING_TOKEN = ...

app = Flask(__name__)
slack_event_adapter = SlackEventAdapter(SLACK_SIGNING_TOKEN, '/slack/events', app)
client = slack.WebClient(token=SLACK_TOKEN)

messages_to_handle = Queue(maxsize=32)
conversations = {}


def reply_to_slack(thread_ts, response):
    client.chat_postMessage(channel=SLACK_CHANNEL, text=response, thread_ts=thread_ts)


def confirm_message_received(channel, message_ts):
    client.reactions_add(
        channel=channel,
        name="thumbsup",
        timestamp=message_ts
    )


def handle_message():
    while True:
        message_id, thread_ts, user_id, text = messages_to_handle.get()
        print(f'Handling message {message_id} with text {text}')
        # Drop the first word, which is the bot mention
        text = " ".join(text.split(" ", 1)[1:])
        try:
            ai = conversations.get(thread_ts, AI())
            conversations[thread_ts] = ai
            ai.call_ai(text)
            response = ai.get_last_message()
            reply_to_slack(thread_ts, response)
        except Exception as e:
            response = f":exclamation::exclamation::exclamation: Error: {e}"
            reply_to_slack(thread_ts, response)
            traceback.print_exc()
        finally:
            messages_to_handle.task_done()


@slack_event_adapter.on('app_mention')
def message(payload):
    print(payload)
    event = payload.get('event', {})
    message_id = event.get('client_msg_id')
    message_ts = event.get('ts')
    # Replies inside a thread carry thread_ts; a top-level mention starts a new thread
    thread_ts = event.get('thread_ts', message_ts)
    channel = event.get('channel')
    user_id = event.get('user')
    text = event.get('text')
    try:
        messages_to_handle.put_nowait((message_id, thread_ts, user_id, text))
        confirm_message_received(channel, message_ts)
    except Full:
        response = ":exclamation::exclamation::exclamation:Error: Too many requests"
        reply_to_slack(thread_ts, response)
    except Exception as e:
        response = f":exclamation::exclamation::exclamation: Error: {e}"
        reply_to_slack(thread_ts, response)
        print(e)


if __name__ == "__main__":
    Thread(target=handle_message, daemon=True).start()
    app.run(debug=True)

How to Make The Chatbot Production-Ready?

The above code serves as a starting point, but it should not be deployed in a production environment as is. Let’s discuss the considerations and improvements that must be implemented before deployment.

Action Confirmations

The code does not include checks to confirm whether the user wants to execute the function suggested by AI. While it may be acceptable for retrieving data, the user should approve modifications or deletions. However, confirmations should not be part of the AI interaction!

We could instruct ChatGPT to ask for user confirmation before calling a function, but there is a risk that AI may ignore the instruction. AI might assume that if the user requested an action, it is already confirmed.

Instead, I suggest adding a confirmation step within the function code. For example, we could send a Slack message to the user with a confirmation link or send the link via email.

Confirmations must be independent of AI interactions! Do not return the confirmation link to AI, so ChatGPT cannot trigger the confirmation itself. Instead, send the confirmation request directly to humans.

In most cases, ChatGPT will not attempt to confirm the action (or we can filter out such confirmations in our function). Using AI to request confirmation of an action that AI wants to perform is as illogical as sending an encrypted attachment to someone and including the password in a separate email sent to the same inbox. Never do it.
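One possible shape of such an out-of-band confirmation is sketched below. Everything here is an assumption made for illustration: the in-memory pending_actions dictionary, the notify_human callback (which could post a Slack message or send an email), and the execute callback that performs the real request. What matters is that the token reaches only the human, never the model.

```python
import secrets

pending_actions = {}  # token -> action awaiting human approval


def request_confirmation(action, notify_human):
    """Park a destructive action and ask a human, outside the AI conversation."""
    token = secrets.token_urlsafe(16)
    pending_actions[token] = action
    notify_human(f"Please confirm: {action['method']} {action['url']} (token: {token})")
    # The text returned to the model deliberately contains no token or link.
    return "The action is waiting for human confirmation."


def confirm(token, execute):
    """Called from the human-facing endpoint, never from the AI loop."""
    action = pending_actions.pop(token, None)
    if action is None:
        return "Unknown or already used confirmation token."
    return execute(action)
```

The function called by AI would return the result of request_confirmation for POST, PUT, and DELETE requests, while a separate endpoint available only to humans would call confirm.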

Storing the Chat History

In the provided code, the chat history is stored in an in-memory dictionary, and no messages are ever removed. Keeping a record forever is a terrible idea, as the dictionary will grow indefinitely until it exceeds the memory limit. In a production environment, store the chat history in a database or implement a mechanism to remove old conversations.
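If a database is more than you need, a simple expiry mechanism may be enough. The class below is a sketch of an in-memory store that forgets conversations after a period of inactivity. The ConversationStore name, the one-hour default, and the injectable clock are my assumptions, not part of the chatbot code above.

```python
import time


class ConversationStore:
    """In-memory store that forgets conversations idle for longer than ttl_seconds."""

    def __init__(self, ttl_seconds=3600, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._items = {}  # key -> (last_used, conversation)

    def get_or_create(self, key, factory):
        self.evict_expired()
        now = self.clock()
        _, conversation = self._items.get(key, (now, None))
        if conversation is None:
            conversation = factory()
        self._items[key] = (now, conversation)  # refresh the last-used time
        return conversation

    def evict_expired(self):
        now = self.clock()
        expired = [key for key, (used, _) in self._items.items()
                   if now - used > self.ttl_seconds]
        for key in expired:
            del self._items[key]

    def __len__(self):
        return len(self._items)
```

Eviction runs on every lookup, so the store needs no background thread. The cost is that expired conversations stay in memory until the next message arrives.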

User Authentication

There should be a mechanism to distinguish between users. While it is possible to map Slack user IDs to users in our system, this approach may have limitations. Instead, the service should use OAuth for user authentication. This way, we can assign short-lived tokens to users and revoke them as needed.

The service code should perform authentication before sending anything to AI, and AI should not be involved in the authentication process.

How to Write Functions For AI?

Limit the Number of Available Functions

Providing too many options can confuse ChatGPT. Additionally, function descriptions consume tokens, and every token incurs a cost. Therefore, it is important to offer access to only necessary functions and avoid redundancy.

Compose Functions

AI performs better when it can call a single function instead of chaining multiple function interactions. If an action requires calls to various functions, I recommend combining them into a single function and allowing AI to call that grouping function instead.

Accept Various Input Formats

In the example code, an employee ID is required. However, this approach may not be ideal. AI needs to determine how to find the user by name and then pass the appropriate ID to the function. Instead, it would be more flexible to create a function that accepts either the employee ID or the name. If the search matches multiple employees, the REST API should return an error, prompting the AI to provide a specific employee ID.
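A sketch of such a lookup, using a made-up in-memory list instead of a real API. The find_employee name, the sample data, and the substring matching rule are assumptions chosen for illustration.

```python
EMPLOYEES = [  # made-up sample data
    {"id": 1, "name": "George Bluth"},
    {"id": 2, "name": "Janet Weaver"},
    {"id": 3, "name": "George Edwards"},
]


def find_employee(id_or_name, employees=EMPLOYEES):
    """Return (employee, error); exactly one of the two is None."""
    text = str(id_or_name).strip()
    if text.isdigit():
        # Numeric input is treated as an employee id.
        matches = [e for e in employees if e["id"] == int(text)]
    else:
        # Anything else is a case-insensitive name search.
        matches = [e for e in employees if text.lower() in e["name"].lower()]
    if not matches:
        return None, f"No employee matches '{text}'."
    if len(matches) > 1:
        options = ", ".join(f"{e['name']} (id {e['id']})" for e in matches)
        return None, f"'{text}' is ambiguous: {options}. Retry with an employee id."
    return matches[0], None
```

The error for an ambiguous name lists the candidates with their ids, which gives the model what it needs to retry or to ask the user which employee they meant.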

Validate Input

Never assume that function arguments generated by the software are correct. Treat all data received from AI as potentially incorrect and validate everything. ChatGPT can make errors just like humans do.
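As an illustration, the arguments of call_rest_api could be checked like this before any request is sent. The validate_arguments name and the rule that URLs must start with /api/users are assumptions based on the endpoints listed earlier; adjust them to your API.

```python
import json

ALLOWED_METHODS = {"GET", "POST", "PUT", "DELETE"}


def validate_arguments(raw_arguments):
    """Parse the JSON string produced by the model and reject anything unexpected."""
    try:
        arguments = json.loads(raw_arguments)
    except json.JSONDecodeError as error:
        raise ValueError(f"Arguments are not valid JSON: {error}") from error
    if not isinstance(arguments, dict):
        raise ValueError("Arguments must be a JSON object.")
    method = arguments.get("method")
    if not isinstance(method, str) or method not in ALLOWED_METHODS:
        raise ValueError(f"Unsupported method: {method!r}")
    url = arguments.get("url")
    # Only relative paths of the known API are accepted (assumed rule).
    if not isinstance(url, str) or not url.startswith("/api/users"):
        raise ValueError(f"URL outside the allowed API: {url!r}")
    body = arguments.get("body")
    if body is not None:
        if not isinstance(body, str):
            raise ValueError("Body must be a string containing JSON.")
        try:
            json.loads(body)
        except json.JSONDecodeError as error:
            raise ValueError(f"Body is not valid JSON: {error}") from error
    return arguments
```

Rejecting absolute URLs matters here because the function concatenates the model-provided path with the base address of the API.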

Fail Gracefully and Return Helpful Error Messages

ChatGPT can correct function arguments if the error message explains what went wrong. If a specific interaction consistently fails, it is a sign that the prompt or function descriptions need improvement.
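One way to make sure a failure always produces a readable message is to wrap every function exposed to AI. The decorator below is a sketch; describe_failure and the get_employee example are hypothetical names, and the wording of the messages is only a suggestion.

```python
def describe_failure(function):
    """Wrap a tool so every failure becomes a message the model can act on."""
    def wrapper(*args, **kwargs):
        try:
            return function(*args, **kwargs)
        except KeyError as error:
            return f"Error: missing required argument {error}. Add it and try again."
        except ValueError as error:
            return f"Error: {error}. Fix the arguments and try again."
        except Exception as error:
            # Last resort: never leave the model without an answer.
            return f"Error: the call failed with {type(error).__name__}: {error}"
    return wrapper


@describe_failure
def get_employee(arguments):
    # Hypothetical tool used only to demonstrate the wrapper.
    user_id = arguments["user_id"]
    if not str(user_id).isdigit():
        raise ValueError(f"user_id must be a number, got {user_id!r}")
    return f"Employee {user_id}"
```

Because the wrapper always returns a string, the result can be sent back as the content of the tool message without further checks.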

How to Debug AI Interactions

Log Everything

Record all messages sent and received from AI. In production systems, I recommend using the structlog library, as it allows for adding context to log messages and facilitates parsing them later.
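To keep the example free of third-party dependencies, the sketch below uses the standard logging module as a stand-in for structlog and writes one JSON object per message. The JsonFormatter class, the logger name ai_interactions, and the context fields are my assumptions. structlog offers the same idea with less code by binding context to a logger.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object so logs are easy to parse later."""

    def format(self, record):
        entry = {"level": record.levelname, "event": record.getMessage()}
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)


def build_logger(stream):
    logger = logging.getLogger("ai_interactions")
    logger.setLevel(logging.DEBUG)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


def log_message(logger, thread_ts, message):
    """Log one chat message together with the conversation it belongs to."""
    logger.debug("ai_message", extra={"context": {
        "thread_ts": thread_ts,
        "role": message["role"],
        "content": message.get("content"),
    }})


stream = io.StringIO()  # a real service would log to stdout or a file
log = build_logger(stream)
log_message(log, "1700000000.000100", {"role": "user", "content": "List employees"})
```

Logging the thread identifier with every message makes it possible to reconstruct a whole conversation later, including the function calls the user never saw.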

Look for Repeating Errors in the Same Context

If AI consistently makes the same error when presented with similar inquiries, it indicates a need to improve the provided instructions.

For instance, in the provided code, there is no function available to search for a user by name. Instead, ChatGPT must iterate through pages containing all employees. If this interaction repeatedly fails, we would need to implement a function specifically designed to search for users by their names.
