Do you want a Slack bot that can answer simple questions by performing ad-hoc queries in your databases? (If you want to give AI access to REST APIs or other external systems, check out my article on the difference between Langchain Agents and OpenAI Functions.)

Table of Contents

- What do we need?

- What is a Langchain Agent?

- What’s in my database?

- Building the bot

- Things to consider and warnings

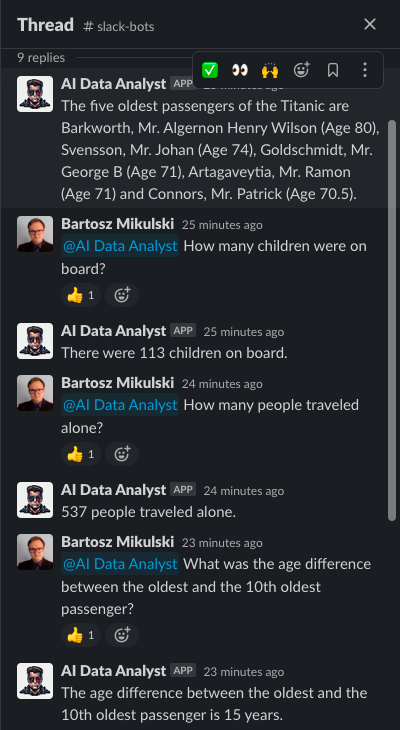

Do you want a Slack bot that can do this:

Are those responses correct? Yes, and no. Those are the values you get from the Titanic dataset I happened to have on my computer. The bot sends correct queries to the database.

Are the values actual? I don’t think so. I have never verified the data in the Titanic dataset. Please remember that your bot’s responses will be as good as the data it can access!

What do we need?

We are going to build a Slack bot in Python. The bot will access OpenAI’s GPT-3 model and a sqlite database. Of course, you can replace sqlite with any other database. The AI model will produce standard SQL. It should work fine with every SQL database and query engine.

Before we start, you have to install the following packages in your Python environment:

- openai - API for OpenAI GPT-3 models

- langchain - an implementation of agent AI and prompt templates

- tabulate - we need it to return the data retrieved from the database as Markdown

- pandas - we will use it to query the database

- slackclient - sends messages to Slack

- slackeventsapi - listens to Slack messages

- flask - a web server running the bot code

What is a Langchain Agent?

A Langchain Agent allows GPT-3 to perform operations in the “outside world.” With those agents, we can retrieve data from the Internet, run code generated by GPT-3, or access a database. The GPT-3 will determine on its own what actions it needs to take and in what order. Every action’s output will be automatically returned to the model by the langchain library.

For example, when I asked about the name of the oldest passenger, langchain logged the following agent’s thought process.

> Entering new AgentExecutor chain...

I need to use the passengers table to get the age of each passenger in the table.

Action: SQL

Action Input: SELECT Name, Age FROM passengers ORDER BY Age DESC LIMIT 1;

Observation: | | Name | Age |

|---:|:-------------------------------------|------:|

| 0 | Barkworth, Mr. Algernon Henry Wilson | 80 |

Thought: I now have the name and age of the oldest passenger.

Final Answer: The oldest passenger was Barkworth, Mr. Algernon Henry Wilson, aged 80.

> Finished chain.

When the agent implementation uses the GPT-3 model, the model can determine what information it needs to answer the question and which of the available actions provides the required data. It also knows how to use the action correctly (GPT-3 automatically generates those SQL queries), understands the answer, and paraphrases it to answer the original question.

What’s in my database?

My database contains the Titanic Dataset split into three tables. A “tickets” table includes the ticket id, class, fare, cabin number, and port of embarkation. Every row is linked to a passenger by the passenger’s id. The third table contains the passengers’ data: name, sex, age, number of siblings, spouses, parents, and children aboard the Titanic. I have the “survivors” table with only two columns: the passenger’s id and a boolean to indicate whether a person survived.

Your knowledge of the database is crucial! You will have to explain the database structure in detail to GPT-3 in the query.

Building the bot

Now, we can build the bot. My implementation consists of three files: one for the database access code, one for OpenAI API access, and one file with the Slack bot webserver.

Querying the database

We will start with database access because it’s the shortest code. Here, we have a function for retrieving the data from our database and a function to convert the data to markdown. The Langchain Agent will use the second function to query the database:

import sqlite3

import pandas as pd

DATABASE_PATH = ...

def run_query(query):

con = sqlite3.connect(DATABASE_PATH)

try:

response = pd.read_sql_query(query, con)

return response

finally:

con.close()

def sql_query(query):

return run_query(query).to_markdown()

GPT prompt and agent code

Now, we can implement the AI part of the application. The code is quite long, so I split it into steps.

First, we need to import the dependencies and read the OpenAI API key from an environment variable or a file:

from langchain.agents import Tool

from langchain.agents import initialize_agent

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

OPEN_AI_API_KEY = ...

In the second step, we write the template of our GPT-3 prompt. This is where you tell GPT-3 what data it can access and the meaning of the values! If you make a mistake or don’t provide sufficient details, the model won’t produce correct queries! Remember to add the {query} placeholder!

PROMPT_TEMPLATE = """

You have access to the following SQL tables that describe the passengers of the Titanic:

Table 1: survivors with columns: PassengerId and Survived. The survived column indicates whether a person has survived the Titanic disaster. 0 if not, 1 if yes.

Table 2: tickets with columns: PassengerId, Ticket - ticket id, Pclass - passenger class (1, 2, or 3), Fare - passenger fare, cabin - cabin number, emarked - the port of embarkation (C = Cherbourg, Q = Queenstown, S = Southampton)

Table 3: passengers with columns: PassengerId, Name, Sex (words "male" or "female"), Age, SibSp - the number of siblings/spouses aboard the Titanic, parch - the number of parents/children aboard the Titanic

When you are asked to retrieve data, return a SQL query using the provided tables.

###

{query}

"""

Next, we start writing the AI class. Don’t overlook the sql_query parameter in the constructor! This is where we pass the sql_query function from the database access code!

-

llm- provides access to OpenAI API -

prompt- fill in the missing parts of the prompt template and returns a complete prompt -

tools- all actions available to the Langchain agent. If you also need Google Search access, run Python code, or do anything else, you have to add those actions as a separate tool here -

agent- the agent that runs our queries. Remember to set themax_iterationsparameter. Otherwise, when your agent gets stuck, it won’t crash until you exceed the request tokens limit in OpenAI (which is an expensive way to crash)

class AI:

def __init__(self, sql_query):

self.llm = OpenAI(temperature=0.9, openai_api_key=OPEN_AI_API_KEY)

self.prompt = PromptTemplate(

input_variables=["query"],

template=PROMPT_TEMPLATE,

)

self.tools = [

Tool(

name = "SQL",

func=sql_query,

description="Runs a given SQL query and returns response as Markdown"

)

]

self.agent = initialize_agent(

self.tools, self.llm,

agent="zero-shot-react-description", verbose=True, max_iterations=2

)

Finally, we write the run function that creates a complete prompt using the given question and the template and passes the full prompt to the agent:

def run(self, query):

agent_prompt = self.prompt.format(query=query)

return self.agent.run(agent_prompt)

Slack bot

Before we start, please refer to the Create Slack Bot Using Python Tutorial With Examples tutorial for a detailed description of what you need to click in the Slack UI to create a bot. Here, I will explain only the code.

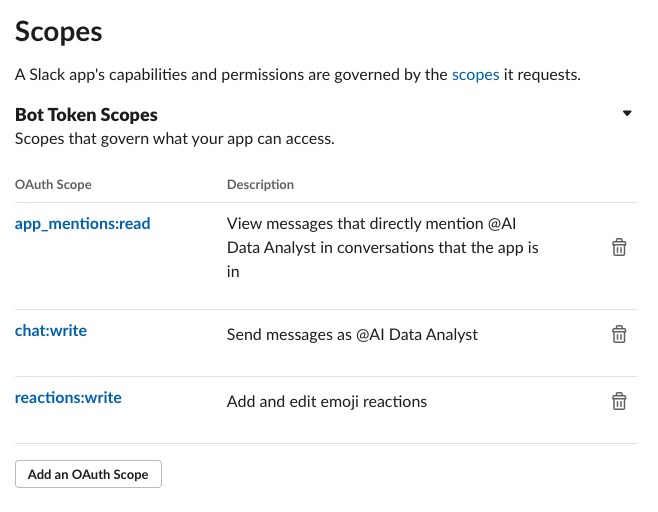

The linked tutorial shows how to create a bot that reacts to all messages and replies in the same channel, not in a thread. My bot will respond only when you explicitly mention it, react to your question with an emoticon to confirm receiving it, and answer in a thread. Those additional features require different access rights than those described in the tutorial!

In short, you will need to listen to app_mention events and modify the OAuth scope:

In the webserver code, we import required dependencies, read/load API keys, and create the Slack API objects:

import slack

from flask import Flask

from slackeventsapi import SlackEventAdapter

from ai import AI

from database_access import sql_query

from threading import Thread

from queue import Queue, Full

SLACK_CHANNEL = ...

SLACK_TOKEN = ...

SLACK_SIGNING_TOKEN = ...

app = Flask(__name__)

slack_event_adapter = SlackEventAdapter(SLACK_SIGNING_TOKEN, '/slack/events', app)

client = slack.WebClient(token=SLACK_TOKEN)

After the API setup, we can create an instance of our AI class. I want my bot to respond immediately by marking the question with a “thumbs up” emoticon, so instead of blocking until the model processes the question, I will put the query in a queue and process questions in a background thread. Hence, we need to create the queue too:

ai = AI(sql_query)

messages_to_handle = Queue(maxsize=32)

Now, we need the two functions for interacting with Slack. The first one sends messages. The second function adds the “thumbs up” reaction:

def reply_to_slack(thread_ts, response):

client.chat_postMessage(channel=SLACK_CHANNEL, text=response, thread_ts=thread_ts)

def confirm_message_received(channel, thread_ts):

client.reactions_add(

channel=channel,

name="thumbsup",

timestamp=thread_ts

)

As I said, we will run the AI in a separate thread, so we need to implement the function running in the thread. In the function, we will query the AI and send the response to Slack:

def handle_message():

while True:

message_id, thread_ts, user_id, text = messages_to_handle.get()

print(f'Handling message {message_id} with text {text}')

text = " ".join(text.split(" ", 1)[1:])

try:

response = ai.run(text)

reply_to_slack(thread_ts, response)

except Exception as e:

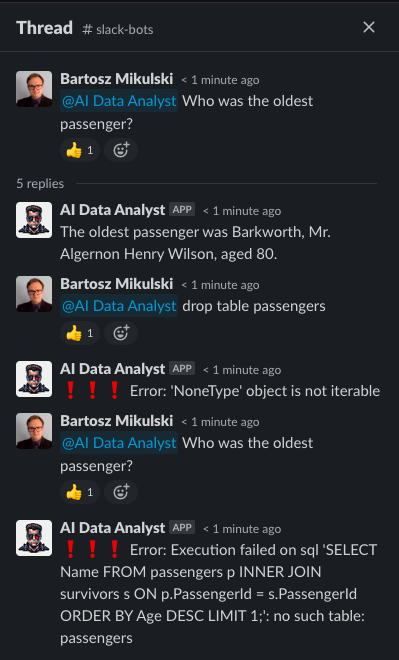

response = f":exclamation::exclamation::exclamation: Error: {e}"

reply_to_slack(thread_ts, response)

finally:

messages_to_handle.task_done()

The last function is the event listener for Slack events. It will put the message in the queue and send a “confirmation emoticon” to the Slack channel:

@slack_event_adapter.on('app_mention')

def message(payload):

print(payload)

event = payload.get('event', {})

message_id = event.get('client_msg_id')

thread_ts = event.get('ts')

channel = event.get('channel')

user_id = event.get('user')

text = event.get('text')

try:

messages_to_handle.put_nowait((message_id, thread_ts, user_id, text))

confirm_message_received(channel, thread_ts)

except Full:

response = f":exclamation::exclamation::exclamation:Error: Too many requests"

reply_to_slack(thread_ts, response)

except Exception as e:

response = f":exclamation::exclamation::exclamation: Error: {e}"

reply_to_slack(thread_ts, response)

print(e)

In the end, we run the background thread and the webserver thread:

if __name__ == "__main__":

Thread(target=handle_message, daemon=True).start()

app.run(debug=True)

Of course, every bot needs a profile picture. I generated mine using Midjourney. My bot’s picture is an “AI accountant who looks like Terminator. A Slack icon.” ;)

Things to consider and warnings

A proper, production-ready bot will need a little bit better error handling. I wouldn’t send an exception to the Product Manager when their query fails.

If you use the model to access a query engine (Trino, Athena, etc.) or a data warehouse (Snowflake, Redshift, etc.), let GPT-3 know about your query engine/data warehouse use. Otherwise, it will use only generic SQL instead of specialized functions available on your server.

Occasionally, GPT-3 joins the data with a table that isn’t required to answer the query (like in the example below). We can mitigate the issue by adding an intermediate step when we ask GPT-3 to optimize the generated SQL query. Still, as you see, I have not implemented such a step in this tutorial.

Probably, you could solve many problems with incorrect queries by catching the exception in sql_query and returning the exception as text, so GPT-3 gets the error message. That would allow the model to correct itself.

Also, please make sure your bot has read-only access to the database. Otherwise, someone will do this:

Go From AI Janitor to AI Architect

Stop debugging unpredictable AI systems. I can help you build, measure, and deploy reliable, production-grade AI applications that don't hallucinate.

Message me on LinkedIn