Building on artificial intelligence, especially the OpenAI API, brings a unique set of challenges. How do we monitor the application? How do we track the requests? How do we tell which requests cost the most and why?

Table of Contents

- Setting up Langsmith and GPTBoost

- Tracking OpenAI Requests and Responses

- Feedback API

- Tracking OpenAI Function Calling

- Langsmith vs GPTBoost - which one should you use?

To answer any of those questions, we need to meticulously track the prompts sent to the AI application. We also need to know the current pricing. The problem quickly becomes a nightmare.

I will show you how to monitor AI applications, track costs, debug prompts, and monitor the usage of the functions API with two solutions: Langsmith — a service built by the creators of Langchain, and GPTBoost — a solution working as a proxy for the OpenAI API.

Setting up Langsmith and GPTBoost

I have already published an article about Langsmith, so take a look if you need help with the setup.

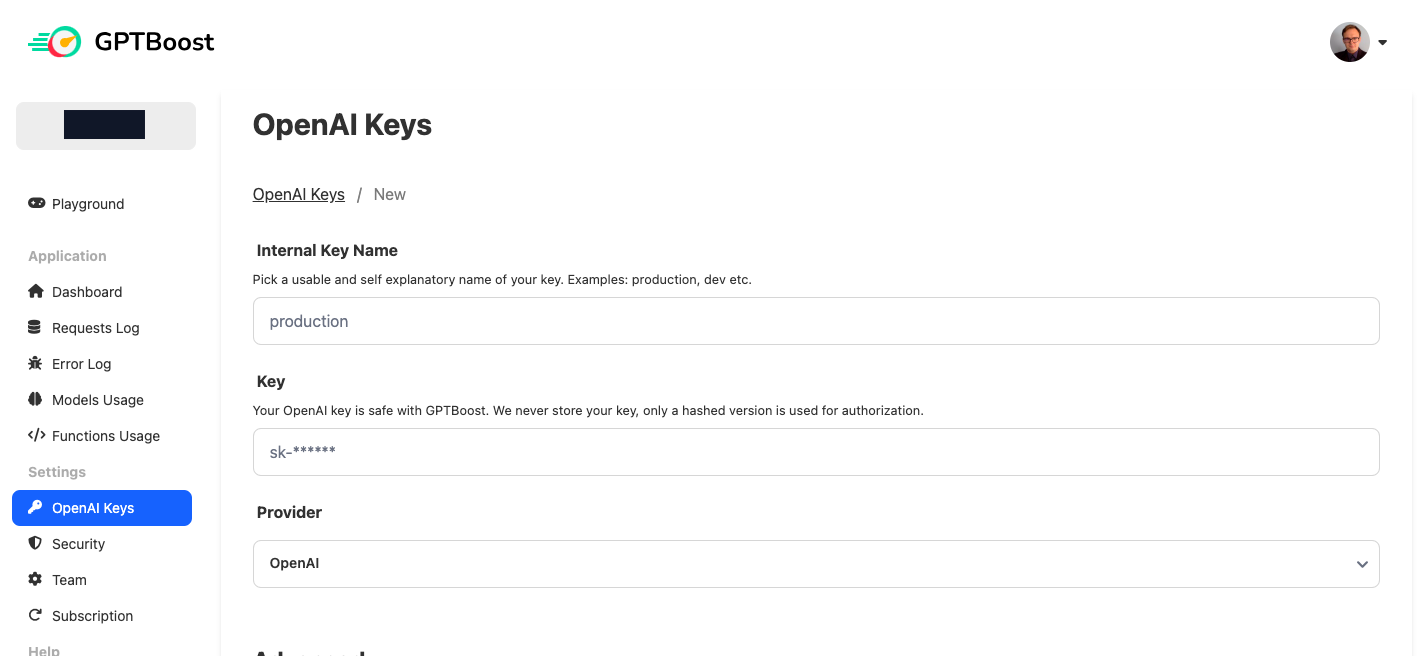

In the case of GPTBoost, we will need an OpenAI API key. We must give GPTBoost our key because the service works as a proxy between our code and OpenAI. GPTBoost will also use the key to assign the OpenAI API usage to our account.

After setting up the key, we must override the API base URL in the model client code so that it points to the GPTBoost proxy.

import os

# Langsmith tracing configuration
os.environ['LANGCHAIN_TRACING_V2'] = "true"
os.environ['LANGCHAIN_ENDPOINT'] = "https://api.smith.langchain.com"
os.environ['LANGCHAIN_API_KEY'] = "ls__..."
os.environ['LANGCHAIN_PROJECT'] = "ai-monitoring"

os.environ['OPENAI_API_KEY'] = "sk-..."

# GPTBoost proxy URL used instead of the default OpenAI API base URL
MODEL_ENDPOINT = "https://turbo.gptboost.io/v1"
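To make the proxy idea concrete outside of Langchain, here is a minimal sketch that builds (but does not send) the same chat request for both base URLs using only the standard library. It assumes the proxy mirrors OpenAI's `/chat/completions` path under the base URL; the `build_chat_request` helper is hypothetical and exists only for illustration.

```python
import json
import urllib.request

# Base URL from the article. The "/chat/completions" suffix is an assumption
# that the proxy mirrors OpenAI's path layout.
MODEL_ENDPOINT = "https://turbo.gptboost.io/v1"
OPENAI_ENDPOINT = "https://api.openai.com/v1"


def build_chat_request(base_url: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the given base URL."""
    payload = {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


direct = build_chat_request(OPENAI_ENDPOINT, "sk-...", "Hello")
proxied = build_chat_request(MODEL_ENDPOINT, "sk-...", "Hello")

# The body and headers are identical; only the URL differs.
print(direct.full_url)
print(proxied.full_url)
```

The point of the sketch is that switching to the proxy should not require any change to the request itself, which is why a single `openai_api_base` override is enough in the Langchain client.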

Privacy and Security

It may not be obvious to everyone so let’s state it: a third-party tool will receive your prompts and AI responses. In the case of GPTBoost, you must also share your OpenAI API key. Ensure you can do that before using any of those solutions.

Tracking OpenAI Requests and Responses

In the first example, let’s make a simple call to the AI model using a Langchain prompt template and the OpenAI client. Note we have to override the URL in the client!

from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate

prompt = ChatPromptTemplate.from_template("Explain in simple German the word {word} and how to use it in a sentence.")

# openai_api_base points the client at the GPTBoost proxy
model = ChatOpenAI(model_name="gpt-3.5-turbo", openai_api_base=MODEL_ENDPOINT)

chain = prompt | model

chain.invoke({"word": "Bierdeckel"})

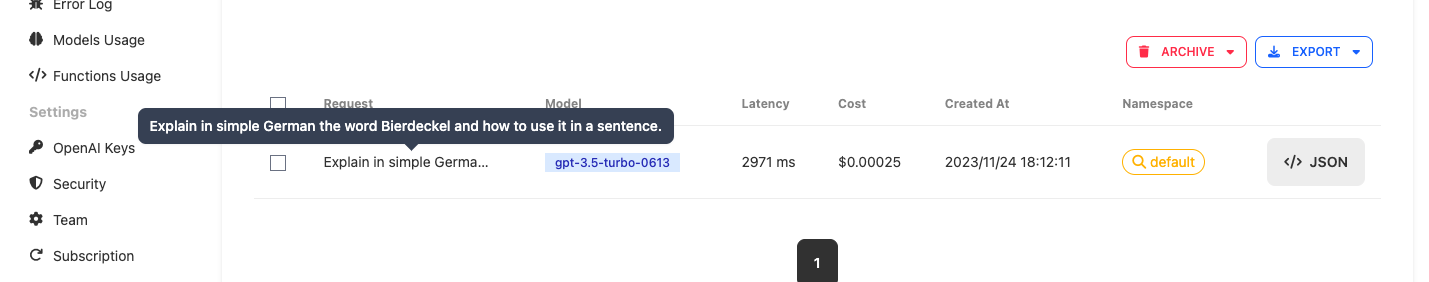

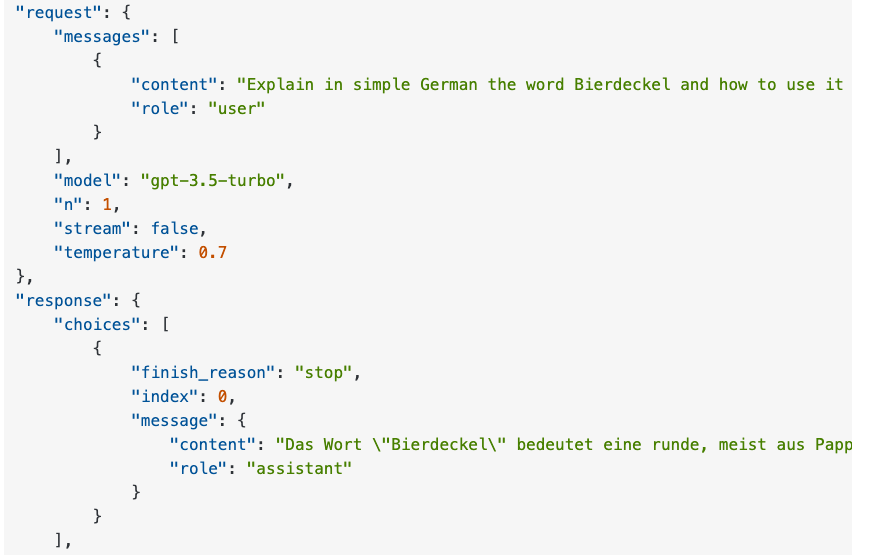

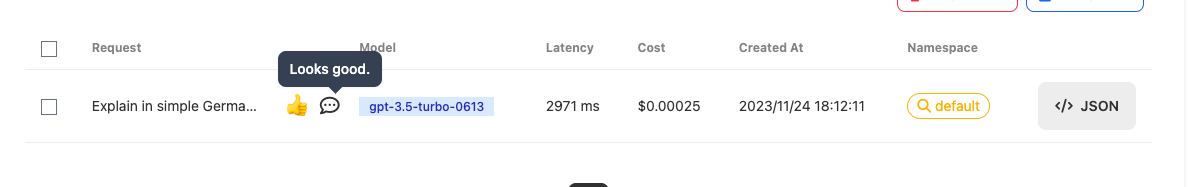

GPTBoost displays all requests in a table view, where we can click a row to see the details of both the request and the response as JSON.
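The response JSON returned by OpenAI includes a `usage` object with token counts, which is what makes per-request cost estimates possible. The following is a minimal sketch of that arithmetic, not a description of how either tool calculates costs. The `Pricing` class, the `estimate_cost` helper, the sample response, and especially the prices are placeholders, so check the current OpenAI price list before relying on any number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per 1,000 tokens. The values used below are placeholders, not real prices."""
    prompt_per_1k: float
    completion_per_1k: float


def estimate_cost(response: dict, pricing: Pricing) -> float:
    """Estimate the cost of one chat completion from its `usage` object."""
    usage = response["usage"]
    return (
        usage["prompt_tokens"] / 1000 * pricing.prompt_per_1k
        + usage["completion_tokens"] / 1000 * pricing.completion_per_1k
    )


# Hand-written sample shaped like an OpenAI chat completion response.
sample_response = {
    "id": "chatcmpl-...",
    "model": "gpt-3.5-turbo",
    "usage": {"prompt_tokens": 2000, "completion_tokens": 500, "total_tokens": 2500},
}

# Placeholder prices, chosen only to keep the arithmetic easy to follow.
placeholder_pricing = Pricing(prompt_per_1k=0.001, completion_per_1k=0.002)

cost = estimate_cost(sample_response, placeholder_pricing)
print(f"{cost:.4f} USD")
```

Prompt and completion tokens are priced separately, so a request with a long prompt and a short answer can cost a different amount than one with the same total token count split the other way.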

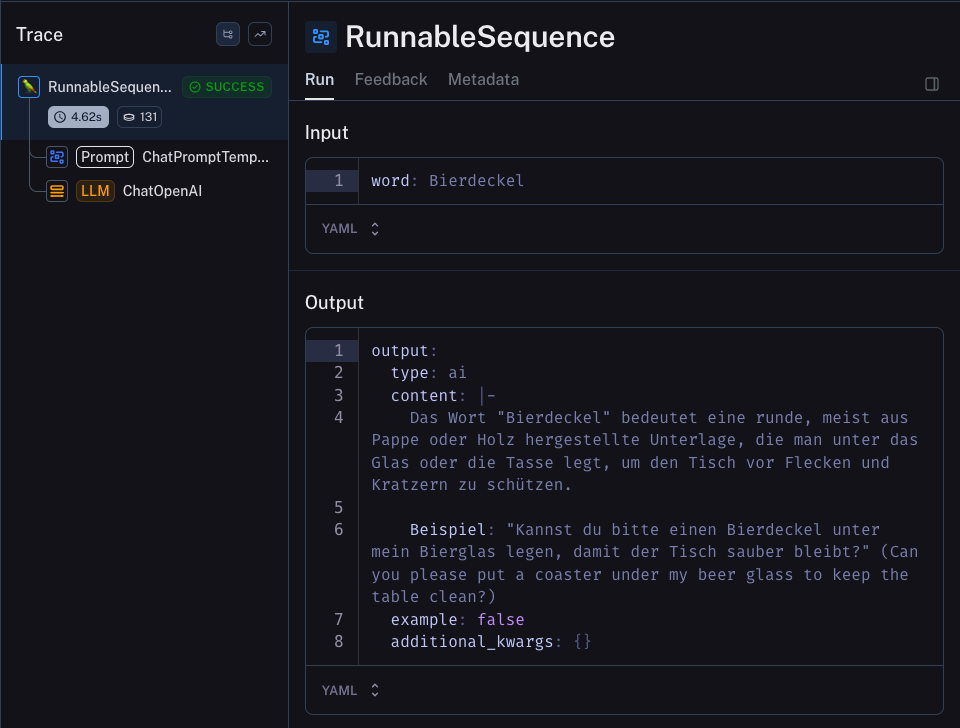

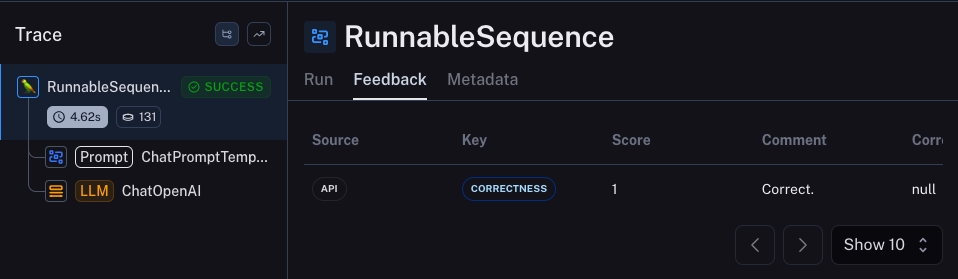

Langsmith offers a similar view, but because we use multiple Langchain objects, all steps of the model call (replacing placeholders in the template with the actual input and calling the API) are logged separately.

Feedback API

Both tools support gathering feedback regarding interactions with AI. We can use the feedback feature to mark requests that should be reviewed to improve the prompt, or we can use the feedback to gather data for fine-tuning of an open-source model later.

In Langsmith, we can add feedback using the Langsmith client. The feedback will later be visible in the Feedback tab of the AI interaction log.

from langsmith import Client

client = Client()

# retrieve all calls logged in the project
result = client.list_runs(
    project_name="ai-monitoring",
)

# add feedback to all of the retrieved API calls
for run in result:
    _id = run.id
    feedback = client.create_feedback(
        _id,
        "Correctness",
        score=1,
        comment="Correct."
    )

GPTBoost has a REST endpoint where we can interact with the messages and add feedback. To store the feedback, we need to know the response ID generated by OpenAI (the ID is a field in the response). Currently, there is no way to retrieve data from GPTBoost using an API, so if you forget to save the response ID, you will have to export the request list as a JSON file and get the identifiers from it.

import requests

response_id = 'chatcmpl-...'

feedback_url = "https://api.gptboost.io/v1/feedback/"

data = {
    "message_id": response_id,
    "rating": 'positive',
    "tags": ['correct', 'translated'],
    "comment": 'Looks good.'
}

requests.post(feedback_url, json=data)
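Because the response ID cannot be fetched back from GPTBoost through an API, one option is to record it at call time. Below is a minimal sketch that appends each ID to a local JSON Lines file. The helper names, the file name, and the example IDs are all made up for illustration; the only assumption about the response is that it is a dict with an `id` field, as in the OpenAI response JSON.

```python
import json
import tempfile
from pathlib import Path


def remember_response(response: dict, prompt: str, log_path: Path) -> str:
    """Append the response ID and its prompt to a JSON Lines file and return the ID."""
    record = {"response_id": response["id"], "prompt": prompt}
    with log_path.open("a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
    return response["id"]


def load_response_ids(log_path: Path) -> list:
    """Read back every saved response ID, in the order it was written."""
    with log_path.open(encoding="utf-8") as log_file:
        return [json.loads(line)["response_id"] for line in log_file if line.strip()]


# Demo in a temporary directory with made-up IDs (not real OpenAI identifiers).
with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "responses.jsonl"
    remember_response({"id": "chatcmpl-example-1"}, "Explain Bierdeckel", log_path)
    remember_response({"id": "chatcmpl-example-2"}, "Explain Feierabend", log_path)
    saved_ids = load_response_ids(log_path)

print(saved_ids)
```

Any of the saved IDs can then be used as the `message_id` in the feedback request, without exporting the request list first.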

After storing the feedback, we will see it in two places: as an icon (with the comment) in the requests log and as an additional field in the request’s JSON (visible in the Requests Log):

Feedback in the JSON:

"feedback": {
    "comment": "Looks good.",
    "feedback_id": "chatcmpl-8OUwrg9qRk0JL2cqTTaIV7ujNTuTJ",
    "rating": "positive",
    "tags": [
        "correct",
        "translated"
    ]
},

When it comes to storing feedback, Langsmith and GPTBoost differ substantially. Langsmith lets us keep multiple feedback entries for every interaction and displays them all in a list. GPTBoost allows only one feedback entry per interaction, and sending additional requests will NOT override the previously stored value.
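Since a second feedback request for the same response is silently ineffective in GPTBoost, it can help to catch repeats on the client side. The sketch below is one possible guard, not part of either tool: `FeedbackLedger` is a hypothetical class that remembers the IDs it has already sent within the current process, and the `send` callable is injected so the example runs without a network call. The payload field names are the ones used in the feedback request above.

```python
from typing import Callable


class FeedbackLedger:
    """Remembers which response IDs already received feedback in this process."""

    def __init__(self, send: Callable[[dict], None]) -> None:
        self._send = send
        self._sent = set()

    def submit(self, response_id: str, rating: str, comment: str = "") -> bool:
        """Send feedback once per response ID; return False for repeats."""
        if response_id in self._sent:
            return False
        self._send({"message_id": response_id, "rating": rating, "comment": comment})
        self._sent.add(response_id)
        return True


# Stand-in sender that only collects payloads; the ID is made up.
sent_payloads = []
ledger = FeedbackLedger(send=sent_payloads.append)

first = ledger.submit("chatcmpl-example-1", "positive", "Looks good.")
second = ledger.submit("chatcmpl-example-1", "negative", "Changed my mind.")

print(first, second, len(sent_payloads))
```

In real use, `send` would wrap the `requests.post` call to the feedback endpoint. The ledger lives in memory only, so it does not protect against repeats across separate runs.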

Tracking OpenAI Function Calling

Naturally, the most interesting part of tracking OpenAI interactions is logging the usage of functions.

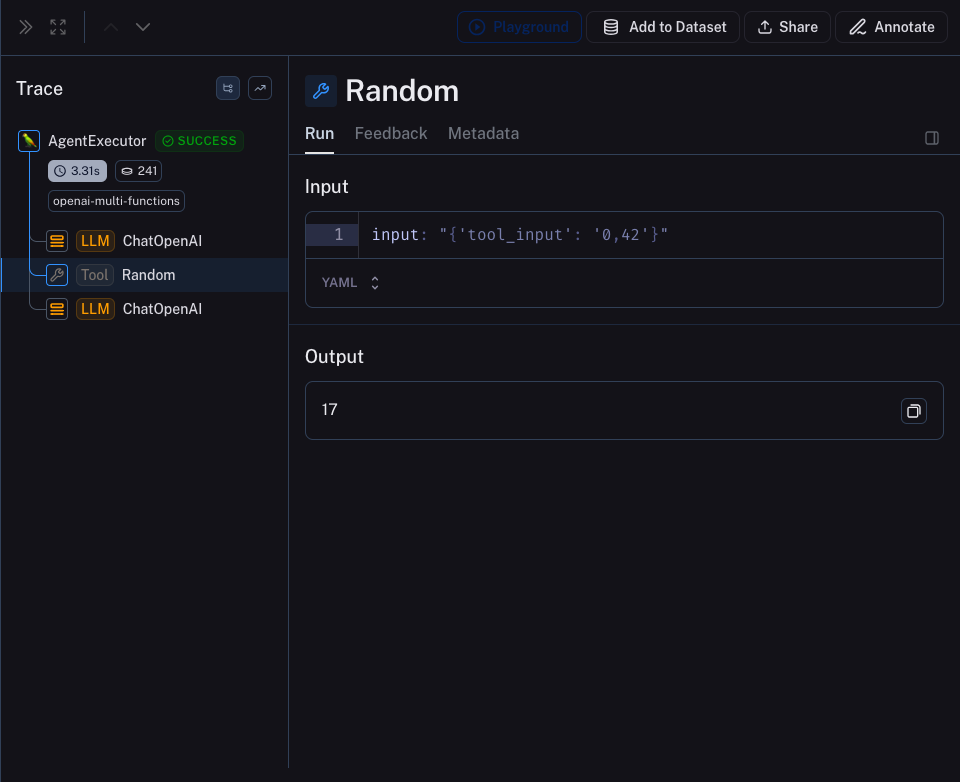

Langsmith allows us to log every AI agent implementation, including agents that don't use OpenAI functions and instead rely on ReAct, Self-Ask, or a different prompt engineering technique. To show both monitoring tools, we will use only the implementation based on the Function Calling feature.

import random

from langchain.agents import AgentType, Tool, initialize_agent
from langchain.chat_models import ChatOpenAI


def generate_random_number(x):
    # the agent passes both bounds as a single "min,max" string
    lower, upper = x.split(',')
    return random.randint(int(lower), int(upper))


tools = [
    Tool(
        name="Random",
        func=generate_random_number,
        description="Useful when you need to generate a random number between two numbers. Takes an input in the format \"min,max\", for example: \"0,10\" or \"10,100\"",
    ),
]

# the model variable contains the model client we configured earlier. The one with the overridden endpoint!
agent = initialize_agent(
    tools, model, agent=AgentType.OPENAI_MULTI_FUNCTIONS, verbose=True
)

agent.run("Give me a random number between 0 and 42")

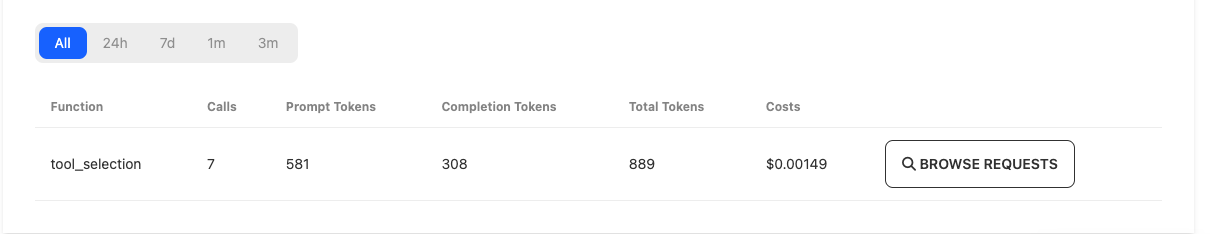

In both services, we see the interaction with the tool. However, Langchain controls the interaction, so Langsmith can show more information. In the Langchain implementation, the interaction with OpenAI Function Calling is implemented as a single function called tool_selection with the function name and its parameters as the arguments.

Langsmith can understand the Langchain format, while GPTBoost can tell us only that a tool_selection function was called. Naturally, we would get a more detailed result if we interacted with OpenAI directly instead of using Langchain.
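For comparison, here is a minimal sketch of what the same tool could look like when declared directly in the OpenAI function calling format, without the Langchain wrapper. It assumes the `functions` style of the API, where the assistant message carries a `function_call` with a name and a JSON-encoded `arguments` string. The parameter names `minimum` and `maximum`, the `dispatch_function_call` helper, and the sample message are illustrative, and the message is hand-written rather than a captured log.

```python
import json
import random

# Function description in the JSON Schema format used by OpenAI function calling.
random_number_function = {
    "name": "generate_random_number",
    "description": "Generate a random integer between two numbers (inclusive).",
    "parameters": {
        "type": "object",
        "properties": {
            "minimum": {"type": "integer"},
            "maximum": {"type": "integer"},
        },
        "required": ["minimum", "maximum"],
    },
}


def generate_random_number(minimum: int, maximum: int) -> int:
    """Return a random integer in the closed range [minimum, maximum]."""
    return random.randint(minimum, maximum)


# Maps function names the model may request to local Python callables.
LOCAL_FUNCTIONS = {"generate_random_number": generate_random_number}


def dispatch_function_call(message: dict):
    """Run the local function named in an assistant message's `function_call`."""
    call = message["function_call"]
    arguments = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return LOCAL_FUNCTIONS[call["name"]](**arguments)


# Hand-written sample of an assistant message, not a captured log.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "generate_random_number",
        "arguments": '{"minimum": 0, "maximum": 42}',
    },
}

result = dispatch_function_call(sample_message)
print(result)
```

With a declaration like this, the function name and typed arguments appear in the request and response themselves, so a proxy that logs raw JSON would presumably record them instead of a generic wrapper call.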

Langsmith vs GPTBoost - which one should you use?

Do we need both, or is Langsmith good enough? If you use Langchain, you need Langsmith. Langsmith effortlessly tracks the interactions between Langchain components such as prompt templates, agents, and LLM clients.

However, Langsmith doesn’t monitor the cost of using the OpenAI API. If you struggle to keep costs under control, you will need both tools.
