For the last year, I have advised managers and entrepreneurs on using AI in their projects and businesses. I talked with bankers, manufacturers, and lawyers. I taught workshops for programmers about integrating AI with their software and using AI to speed up software development. I met teams trying to make their software products more interactive and user-friendly. I heard complaints and excuses for why AI won’t be a good solution in their case. I saw some mistakes, a few lost opportunities, and lots of indifference mixed with complacency. Here is what I learned.

Table of Contents

- Chatbots are not the killer feature of AI

- AI kills jobs only when it kills entire businesses

- Skeptics keep raising the bar

- People hate AI

- Hype creates ridiculously inflated expectations

- Most paid AI services can be replicated in 2 weeks by an average programmer

- Quick wins are overlooked

- How to use AI in business without losing your mind and money

Chatbots are not the killer feature of AI

Mass awareness of Generative AI started with ChatGPT. Consequently, for some time, we assumed chatbots would become the killer feature of AI. I wrote several articles showing how to build an AI-powered Slack bot, a Facebook bot, or a website on which you can talk with AI about the content of any YouTube video. All of those things work fine, but along the way, we discovered AI can do much more. Soon, people started building chatbots to talk with your Notion notes, documents, or phone call transcripts. It’s all impressive, but AI doesn’t end with chatbots. Chatbots are one of the AI features that seem great initially, but you quickly discover their limitations.

Not only do chatbots tend to agree with everything their users say, but they will also lie to please the user. Good luck building a customer support chatbot like this. Of course, you can create the chatbot, and people may like using it, but would it be good for the business? Imagine getting a lawsuit because the chatbot promised something you cannot deliver.

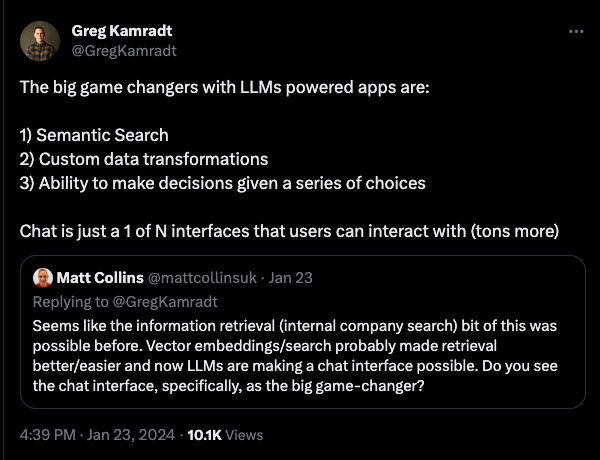

As Greg Kamradt says, “Chat is just a 1 of N interfaces that users can interact with (tons more).” After all, if AI is so intelligent, why do I even have to tell it what I want? Can’t AI anticipate what I would need and be proactive? If you use any AI-powered auto-completion, such as GitHub Copilot or even the sentence completion feature in Gmail, you know AI can do auto-completion, and it’s improving.

According to Greg, the actual game changers are semantic search, data transformation, and automated decision-making. Of course, the semantic search use cases we are currently building are based chiefly on inflated expectations. We hope that throwing a few gigabytes of PDFs into a vector database will make AI answer our questions correctly. It won’t happen unless we see yet another breakthrough in AI technology. Semantic search works best when we put lots of effort into data preprocessing, both when we populate the vector database with data and when we retrieve the answers.

If you have ever heard of vector databases, you may know such databases search for text similar to the query. We use vectors because we hope an answer to a question contains words (or their synonyms) similar to the question itself. It’s not always the case. Therefore, in the case of semantic search, we need to paraphrase the question (or sometimes, paraphrase the input data while storing it in the database, too), assuming multiple versions of the same query help us find more relevant information without unearthing too much noise. We may get a decent question-answering solution when we put AI on top of the vector database search, unless your users intentionally try to break your AI. We will talk about that later.
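
The paraphrasing idea can be sketched without a real vector database. The snippet below is only an illustration under loud assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model, the paraphrases are hard-coded instead of generated by AI, and every name is hypothetical. It shows a question that shares no words with its answer, and how extra versions of the query recover the right document (along with a bit of noise).

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def search(queries, documents, top_k=2):
    """Score each document by its best similarity to any version of the query."""
    scored = []
    for doc in documents:
        doc_vec = embed(doc)
        best = max(cosine(embed(q), doc_vec) for q in queries)
        scored.append((best, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

# Hypothetical knowledge base and question.
documents = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Our office is open Monday to Friday.",
    "Shipping takes three business days.",
]
question = "How long until I get my money back?"

# In a real system, AI would generate these paraphrases.
paraphrases = [
    question,
    "When are refunds issued?",
    "How many days does a refund take?",
]

only_original = search([question], documents)
with_paraphrases = search(paraphrases, documents)
```

With the original question alone, nothing matches because the question and the answer share no words. With the paraphrases, the refund policy ranks first, and the shipping sentence sneaks in as noise because it also mentions "days". A real embedding model handles synonyms far better than this toy, but the trade-off between recall and noise stays the same.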

Custom data transformations are all of the “Summarize,” “Find the next action,” or “Find quote” features. The text transformation capability works excellently in the current Generative AI models. Therefore, over the last year, hundreds of “Summarize” buttons were added to many applications. Most of them didn’t need such a feature at all. Even Todoist has an AI Assistant now. The assistant can break a task into subtasks. The assistant’s subtasks are overly verbose, based on some implicit assumptions hidden from the user, and the entire feature is too slow. I don’t find it helpful, but someone decided Todoist needs an AI Assistant. It’s probably because “everyone else has one.” I hope you have a better reason to use AI.

Decision-making AI is a controversial topic. As soon as you mention it, people come up with cliche examples of situations when AI can’t make the right decision, such as the Trolley problem or autonomous military drones. We are not talking about such high-stakes decisions.

For a start, we may use AI to route support emails to an appropriate team instead of making people read irrelevant emails and forward them to each other until they find someone who can solve the problem. AI may also suggest to a sales representative which product to try upselling, or even prepare an email with the offer. However, I recommend thinking twice, three times, or as many times as required until you decide it’s a terrible idea to send the email without getting the content approved by a competent human first.
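
A low-stakes routing decision like this can be wrapped in a few lines of code. The sketch below assumes a hypothetical `classify_email` function standing in for the AI call (here faked with keywords so the example runs on its own) and made-up team names. The part worth copying is the fallback: any label outside the allowed list goes to a human.

```python
# Hypothetical team names; replace with your own.
TEAMS = {"billing", "technical", "sales"}

def classify_email(text):
    """Stand-in for an AI model call that returns a team label."""
    lowered = text.lower()
    if "invoice" in lowered or "refund" in lowered:
        return "billing"
    if "error" in lowered or "crash" in lowered:
        return "technical"
    if "pricing" in lowered or "upgrade" in lowered:
        return "sales"
    return "no idea"  # models sometimes answer outside the allowed labels

def route(text):
    """Send the email to a known team, or to a human triage queue otherwise."""
    label = classify_email(text).strip().lower()
    return label if label in TEAMS else "human_triage"

routed = route("The app shows an error after login")
fallback = route("Hello, can we meet?")
```

The first email goes to the technical team. The second one matches nothing, so it lands in the human triage queue instead of being forced onto a random team.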

AI kills jobs only when it kills entire businesses

We have seen news of companies firing employees and saying it happened because of AI. It’s a lie. AI has become a convenient excuse when you want to fire a few hundred people to cut costs and give the stakeholders higher bonuses. Previously, you had to hire external business consultants to provide an excuse. The layoffs weren’t your fault; the consultants told you to fire people. Now, we have AI, and we say AI replaced workers. Replaced them with what? Have you seen any of those automations? Are the remaining employees more productive?

Of course, some companies fire employees because of AI. Let’s think about the underlying reason. Are they firing them because the remaining people can double their efficiency with AI and don’t need such a large team anymore, or are they firing people because their entire business makes no sense in the world with AI?

Jasper used to be a tool for writing emails, articles, or notes with the help of AI. They used an early version of generative AI. When ChatGPT was released, Jasper had to rebrand to become an AI Copilot for marketing teams. Their previous business idea makes no sense anymore. Who would pay them for automated email writing when you can get better quality content from ChatGPT, even in its free version?

Duolingo fired some of its translators because AI can do their jobs. To be precise, Duolingo fired contractors who worked at companies providing translation services. Since AI can replace such services, the entire business model of translation service companies is in danger.

What about Duolingo? Does a fun but, frankly, inefficient method of language learning still make sense when we can have an AI-powered personal language tutor with whom we can talk about any topic and who will explain any grammar structure, providing as many examples as we need? Did Duolingo fire people to substitute them with AI, or did Duolingo fire people to survive a little bit longer in a world where AI replaced their service? Addictive gamification and a sense of progress (regardless of any actual language-skill improvements) may not be enough for them to make a profit.

Skeptics keep raising the bar

But AI keeps making mistakes! It hallucinates. The translations are not perfect. It writes articles with lots of fluff and little substance. Yes, but one year ago, AI couldn’t even do those tasks. AI may always make mistakes in translations, but bilingual people make such mistakes, too. AI may write more like Tom Wolfe than Ernest Hemingway, but some people prefer Wolfe over Hemingway.

The AI skeptics hold AI to standards far higher than those they apply to people. You will always be able to find some imperfections. AI doesn’t need to be omniscient.

Unfortunately, when I taught workshops for legal departments, the lawyers focused on the mistakes. I guess it’s natural for them. Lawyers should be nitpicking. AI may miss the nuance of adding or removing a comma in a sentence and the tremendous legal consequences of such an edit. However, with the proper data preparation, AI should be able to find laws relevant to a specific case, past court verdicts, or loopholes used by lawyers in the past.

Similarly, managers tend to dismiss AI’s decision-making capability because they imagine it means the AI will make strategic decisions or tell them how to run the company. When they focus on such high-level expectations, managers overlook the small daily choices they must make. What notes do you need to read before the next meeting? Who promised you a follow-up, and on what? (In this case, a truly useful AI would remind the person who promised the follow-up, not the manager waiting for it.) Should we call the client in the morning or the afternoon? (Do you really need to remember whether you close more sales calling early or whether the person you call has more time in the afternoons?) All of those look trivial, but calling at the wrong time or being unprepared for a meeting may be costly mistakes. How could you spend your time and energy if you didn’t need to think about such details?

Skeptics are everywhere. Programmers should be enthusiastic about AI, right? In every workshop, I met someone expecting to get perfect code from AI every time. The programmer couldn’t express their expectations clearly in writing and anticipated AI would guess what needs to be done. The same programmer wouldn’t say StackOverflow (a forum for programmers to ask specific questions and vote on which answer is correct) is useless if the answer isn’t exactly what they need.

Let’s focus more on AI-assisted work than on work being replaced by AI. I will show you some ideas in the later part of this article.

People hate AI

When you build a chatbot and give the public access to it, I’m sure two things will happen. First, people will try to use your chatbot as a free ChatGPT. Free for them. You are going to pay for all of those requests. Second, someone will attempt to break your AI by making the chatbot say something inappropriate or incorrect, just like someone did when DPD released a customer service chatbot. According to Sky News, someone made the bot swear and call DPD the worst delivery service. The company had to shut down the chatbot.

It all sounds terrible, but you can make it even worse by instructing your chatbot to pretend it is a real person. Being a fake never works in customer support. Do you think people trust companies that outsource their call centers to India or Poland but tell their employees to use Americanised names? Does Santosh pretending to be Matthew or Katarzyna playing the role of Sarah seem convincing?

I’m convinced the widespread use of AI chatbots for customer support is terrible. Every company that makes customer support an unpleasant experience has forgotten that its clients generate 100% of the company’s profit. You can force people to use your chatbot by providing no other option, but your competition, which still lets customers talk with real people, will gain a competitive advantage for free.

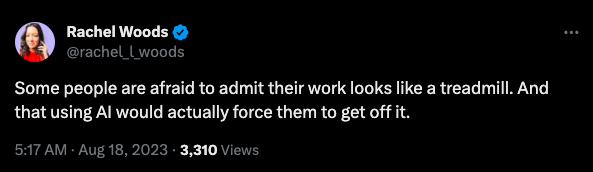

So, instead of exposing the chatbot or any other AI system to the public and letting it inevitably get vandalized, we could build internal AI tools. Will this make employees happy? I’m not sure.

Do people want to be more efficient at work? According to Talentism, “In a pre-pandemic study, it appeared that laziness was endemic in the workforce. 75% of respondents admitted they weren’t working to the best of their ability even once a week.” Talentism says it happens primarily because of inefficiency in internal processes, not actual laziness. But how often do you see people fighting inefficiency? In a recent study, Gallup found that “U.S. employee engagement took another step backward during the second quarter of 2022, with the proportion of engaged workers remaining at 32% but the proportion of actively disengaged increasing to 18%. The ratio of engaged to actively disengaged employees is now 1.8 to 1, the lowest in almost a decade.”

On the one hand, at least in some cases, people are “disengaged from work” because employees are bored with their tasks, and letting AI do at least some part of the work could increase the team’s job satisfaction and productivity. On the other hand, I imagine there are people whose job is to copy information from incoming emails into a CRM, and they don’t want any other work because such a task lets them daydream, browse the Internet, gossip with coworkers, etc. Those people would be furious if you suggested automating their work with AI.

What can you do? Don’t make your AI transformation a surprise, and don’t automate too many things at once. Start with the most annoying tasks. Nobody is going to protest if you automate something they hate. Afterward, move on to the next most annoying task. What about the people who happily do menial work? Make them responsible for supervising AI automation related to something outside of their everyday tasks. Let them get used to working alongside AI.

Hype creates ridiculously inflated expectations

We did an excellent job marketing AI. Now, people expect to be able to drop all their documents into a database or directly in the prompt and have AI answer all questions perfectly. As I said, AI doesn’t need to be perfect - it may never be perfect.

In my article on solving business problems with AI, I compared AI to a person sitting in an empty room with a pen and paper. The person in the room receives printouts with the necessary information and a piece of paper with the instructions. If they need something calculated, found in archives, or filed for later use, they must write the task down and hand it to someone else. That’s your AI. When solving a problem with AI, think of how such a person could solve the problem using only pen and paper. The person and AI can use external tools (databases, Internet access, running code) but have to write the instructions first and hand them over to someone capable of understanding them. If you can conceive a problem-solving process that may be effective in such a limiting setup, you may be ready to use AI, and there is a chance you will get good results. On the other hand, if you can’t imagine how a person equipped with only a pen and paper could do what you need, AI will most likely fail, too.

Also, remember that interactions with external systems are always error-prone, and too many of them will deteriorate AI’s answers. Suppose your idea requires using AI as a coordinator, asking for information from software, passing tasks to people, and checking whether they have finished them. In that case, you either need to use AI separately for every step without having a global coordinator or simplify the process.

Be aware of the tasks AI is most likely to fail at. Instructions with negations are more likely to be misunderstood than instructions phrased as positive statements. If you want to remove some behavior, say “Avoid doing X” instead of “Don’t do X.” The problem of ignoring negations may be less common in GPT-4, but earlier versions and open-source models often require rewording the prompt. In the case of image-generating models, it’s best to avoid mentioning what you don’t want.

Last but not least, AI would rather hallucinate and give you utter nonsense than say it doesn’t know something or is not capable of doing the task you want. We all know people like this and understand how to act around them. We need the same skills for AI. When you use AI to find a relevant quote in the article, give it a couple of examples of text with and without relevant information and the answer you expect in all those cases. This prompting technique is called in-context learning, and depending on the number of examples, you may say it’s one-shot (one example) or few-shot (2+) in-context learning. Providing examples of what to do with missing data shows AI that returning “Not enough information” is one of the acceptable options.
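
Here is roughly what such a prompt might look like in code. This is a sketch, not a recipe: the example texts are invented, the function names are hypothetical, and the list of `role`/`content` dictionaries follows the common chat-message layout, which I assume your AI provider accepts. Sending the messages to a model is left out on purpose.

```python
SYSTEM = (
    "Find a quote in the article that answers the question. "
    "Reply with the exact quote, or with 'Not enough information' "
    "when the article does not contain the answer."
)

# Invented examples: one where the quote exists, one where it does not.
EXAMPLES = [
    {
        "article": "The Acme warehouse opened in 2019. It employs forty people.",
        "question": "When did the warehouse open?",
        "answer": "The Acme warehouse opened in 2019.",
    },
    {
        "article": "The Acme warehouse opened in 2019. It employs forty people.",
        "question": "Who manages the warehouse?",
        "answer": "Not enough information",
    },
]

def format_input(article, question):
    """Lay out the article and the question the same way every time."""
    return f"Article:\n{article}\n\nQuestion: {question}"

def build_messages(article, question):
    """Return a chat-style message list with the few-shot examples first."""
    messages = [{"role": "system", "content": SYSTEM}]
    for example in EXAMPLES:
        messages.append({
            "role": "user",
            "content": format_input(example["article"], example["question"]),
        })
        messages.append({"role": "assistant", "content": example["answer"]})
    # The real request goes last, in the same format as the examples.
    messages.append({"role": "user", "content": format_input(article, question)})
    return messages

messages = build_messages("Our store closes at 8 PM.", "What is the return policy?")
```

The second example matters most. It shows the model a case where the honest answer is "Not enough information", so admitting ignorance becomes a pattern it has already seen.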

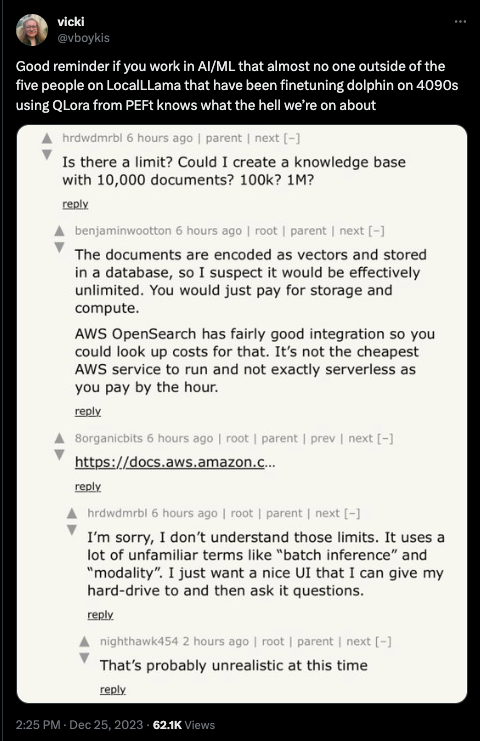

Most paid AI services can be replicated in 2 weeks by an average programmer

One week if the programmer uses AI to write code.

Many AI-based services developed last year are simple wrappers around the OpenAI API. Those applications add a few instructions to the prompt and, sometimes, transform the output. And that’s ok! We are at an early stage of using Generative AI. The applications we build right now are the equivalent of early iPhone or Android apps. The early app stores were full of alarm clocks, timers, flashlights, calendars, and other simple applications replicating built-in functions of the mobile OS with some tiny customization. AI-based applications are at a similar stage right now.

What does it mean for your business? I think it means two things. First, you can’t expect any of those applications to exist in a year because the developers may decide to move on to something else, or running the application will cost way more than they can ever earn. Second, you can build your own AI-based applications because the current implementations are still relatively simple. Don’t get me wrong. The underlying technology is super complex, but you don’t have to understand it when all you do is put some pre-built elements together and write code to make it work. The programmer you have already hired can do it, or if you don’t have a programmer on your team, you can hire a consultant to build the automation for you. The most difficult and time-consuming task will be making the website look nice (if you want to interact with AI using a website).

In many cases, you don’t even need a programmer. No-code automation platforms such as Zapier, Make.com, or Automatisch can interact with OpenAI, too. For example, I have built a Make.com pipeline to find relevant information in many newsletters I subscribe to and send a summary directly to my Kindle, so I don’t have to delve into emails.

It may seem complicated, but you configure the pipeline by moving around icons displayed on a website without writing any code. Still, you don’t have to do everything yourself. Why don’t you delegate the task to your employees or hire a freelancer responsible for keeping the AI automations running and updating them when you need changes?

Quick wins are overlooked

I told you we should look for opportunities to create AI solutions that assist us while we work, not replace us. What can we do quickly and cheaply?

Do your sales representatives spend time writing down phone call summaries and adding them to your CRM? Why? Most, if not all, video conference software has a transcription feature now, or you can install a plugin. Why don’t you send a transcript of the call to AI and get the summary automatically added to the CRM? If the salespeople save 30 minutes daily, they can have one more call. How many salespeople do you have on your team? How many more prospects can they call in a month if everyone has time for one more call daily?
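
To put numbers on those questions, here is a back-of-the-envelope calculation. The team size and the number of working days are made-up assumptions; plug in your own.

```python
def extra_calls_per_month(salespeople, working_days=21, extra_calls_per_day=1):
    """Extra calls per month if every salesperson gains time for more calls."""
    return salespeople * working_days * extra_calls_per_day

# Hypothetical team of 10 salespeople, 21 working days in a month:
# 10 * 21 * 1 = 210 extra calls.
extra_calls = extra_calls_per_month(10)
```

Even for a small team, half an hour saved per person per day adds up to a couple of hundred additional conversations with prospects every month.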

Does your support team look for similar support cases in the past to find a proven solution instead of trying to figure it out from scratch? Did you know AI can understand the text in the problem description provided by your client and find past support issues similar to the one at hand? After that, AI can generate step-by-step instructions based on the past answers provided by your support team. Would such automation speed up the work? Would the client be happy if they received a helpful response sooner? Would they buy from you more often?

Greg Kamradt’s newsletter mentioned a conversation with Rex Harris, Chief Product Officer at Bryte, who said they are using the Claude 2.1 AI model to extract information from surveys. They upload hundreds of survey responses into AI and tell it to pull out themes and correlations that span multiple responses. According to the newsletter, the automation saves 20 hours of work per survey.

What else? To find ideas, look for tasks that require searching for information in large amounts of text, or in relatively short texts but many times a day. Even the automated email forwarding I mentioned earlier may save you some time. It may be only one minute per message, but how many such messages must be forwarded monthly?

How to use AI in business without losing your mind and money

Start small. Automate simple, recurring tasks and focus on the cumulative time saved. Don’t spend a month analyzing the available options; instead, focus on learning by doing.

Keep humans in the loop. Don’t let any irreversible action happen without human approval. It’s ok to automatically store AI-generated information in a CRM or database if someone has to look at it before you use it for decision-making. If you let AI make a decision, don’t make it final until a person competent enough to judge the decision sees the AI’s choice. Never let AI talk to your customers directly. It’s not ready for it yet. Let AI generate an email draft, but don’t send the message until someone approves the content.
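
The approval rule can be enforced in code rather than left to habit. Below is a minimal sketch with hypothetical names (`Draft`, `approve`, `send`) and a plain list standing in for a real email service. The idea is that the sending function itself refuses anything a human has not signed off on.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Draft:
    """An AI-generated email waiting for a human decision."""
    recipient: str
    body: str
    approved_by: Optional[str] = None

def approve(draft, reviewer):
    """Record which person approved the draft."""
    draft.approved_by = reviewer
    return draft

def send(draft, outbox):
    """Refuse to send anything a human has not approved."""
    if draft.approved_by is None:
        raise PermissionError("Draft needs human approval before sending")
    outbox.append(draft)  # stand-in for a real email service call

outbox = []
draft = Draft("client@example.com", "Hello, here is our offer.")

try:
    send(draft, outbox)  # blocked: nobody has approved it yet
    blocked = False
except PermissionError:
    blocked = True

send(approve(draft, "reviewer@example.com"), outbox)  # goes through
```

Putting the check inside `send` means nobody can skip the approval step by accident, no matter which part of the automation tries to deliver the message.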

When preparing data for AI, don’t just throw every file you can find into the prompt or a database. Clean the data first by removing irrelevant information, and be deliberate about the data you give AI or store in databases used by AI.

Most importantly, keep calm. We are still early. Your competition is not leaving you behind. Anyone who says you are falling behind is trying to sell you an overpriced online course.

If you want inspiration, read my other articles or message me. I’m happy to help.
