GPT-3 and ChatGPT cannot access the Internet, right? What if they could? What if I told you we could connect GPT-3 to the Internet, let it search Google for websites, and download their content?

Table of Contents

- How to create a Slack bot

- Connecting GPT-3 to the Internet

- Implementing a custom tool for downloading websites in Langchain

- How to use it

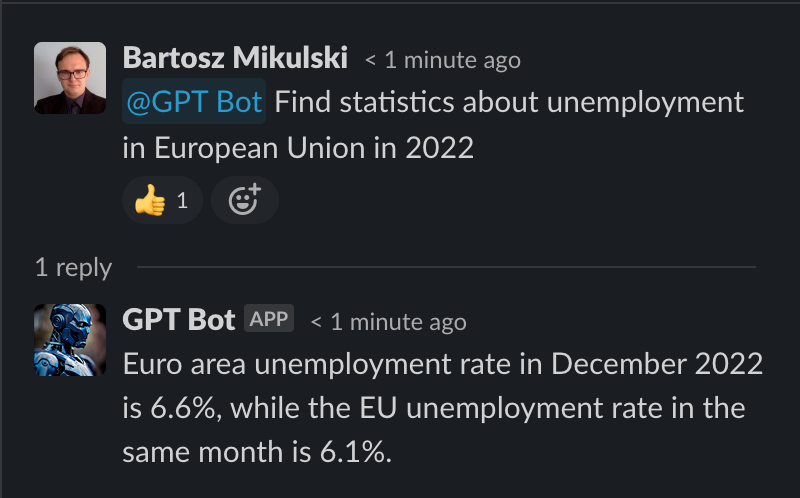

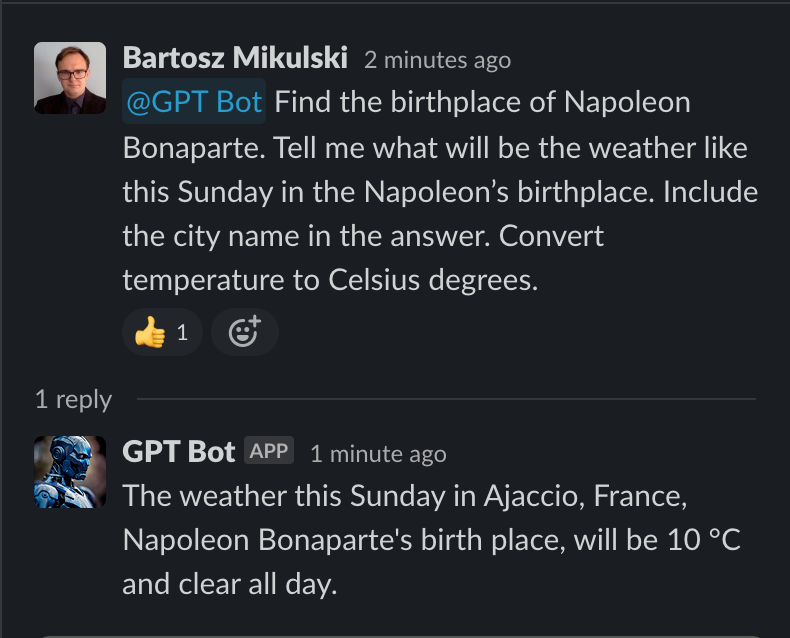

As in my previous article about extending GPT-3's capabilities, we will build a Slack bot and use the Langchain library to let it perform actions in “the outside world.” When we finish, we will have a Slack bot that can do a simple web search (first screenshot) and even a short, multistep search (second screenshot).

How to create a Slack bot

I won’t repeat the setup instructions here. You can find a step-by-step guide in the previous article. This time, I reused the same Slack-related code and changed only the AI part, so let’s focus on the AI.

Connecting GPT-3 to the Internet

We will need the Langchain library and its agents. An agent lets the AI model decide which action to take next, and tools are the implementations of the actions it can perform. Langchain has some tools already implemented, but we can also add custom ones. Our bot will need three: one for web search, one for extracting text from websites, and one for performing calculations and unit conversions.

First, we import the required dependencies:

```python
from langchain.agents import Tool
from langchain.agents import load_tools
from langchain.agents import initialize_agent
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate

from extractor_api import ExtractorAPI
```

ExtractorAPI doesn’t exist yet. We will implement it later in this article.

Now, we can create a new class and instantiate all the required objects in its __init__ method. We start with the language model. Our bot uses the OpenAI API to access a GPT-3 model:

```python
class AI:
    def __init__(self):
        self.llm = OpenAI(temperature=0.9)
```

Remember to set the OPENAI_API_KEY environment variable (or pass the API key as a parameter of the OpenAI constructor).

We need to tell the model what its role is and what we want from it. A PromptTemplate does this. It inserts the text we send from Slack into a template that can tell GPT-3 how to answer questions, what it shouldn’t say, and so on. We add the following lines to the __init__ method:

```python
        self.prompt = PromptTemplate(
            input_variables=["query"],
            template="""
You are a personal assistant. Your job is to find the best answer to the questions asked.
###
{query}
""",
        )
```
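It may help to see what the agent actually receives once the template is filled in. With a single variable, PromptTemplate's default f-string format substitutes {query} essentially the same way Python's built-in str.format does. The sketch below therefore uses only the standard library. TEMPLATE and build_prompt are illustrative names, not part of Langchain.

```python
# Illustrative copy of the template text. PromptTemplate's default
# "f-string" format substitutes {query} essentially the way str.format does.
TEMPLATE = """
You are a personal assistant. Your job is to find the best answer to the questions asked.
###
{query}
"""


def build_prompt(query: str) -> str:
    """Return the text the agent receives for a given user question."""
    return TEMPLATE.format(query=query)


print(build_prompt("Find statistics about unemployment in European Union in 2022"))
```

Braces inside the user's text are inserted literally. Literal braces in the template itself, however, would have to be doubled ({{ and }}), or formatting fails.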

Next, we load two of Langchain’s built-in tools: SerpAPI to search Google and the Wolfram Alpha API to run calculations. Both tools need API keys, which they read from the SERPAPI_API_KEY and WOLFRAM_ALPHA_APPID environment variables. Alternatively, we can instantiate the tool objects explicitly and pass the keys to their constructors.

```python
        self.tools = load_tools(["serpapi", "wolfram-alpha"], llm=self.llm)
```
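Because every service reads its key from an environment variable, a missing key typically surfaces only when the tool is constructed or its first request fails. A small pre-flight check lets the bot fail fast with a clear message instead. The helper name missing_env_vars is hypothetical. The variable names are the ones this article uses, and EXTRACTOR_API_KEY belongs to the custom tool implemented later.

```python
import os
from typing import Iterable, List, Mapping, Optional

# Environment variables read by this bot: OpenAI, SerpAPI, Wolfram Alpha, ExtractorAPI.
REQUIRED_VARS = (
    "OPENAI_API_KEY",
    "SERPAPI_API_KEY",
    "WOLFRAM_ALPHA_APPID",
    "EXTRACTOR_API_KEY",
)


def missing_env_vars(
    names: Iterable[str] = REQUIRED_VARS,
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return the names that are unset or empty in env (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]
```

Calling missing_env_vars() before creating the AI object, and stopping if the returned list is non-empty, keeps configuration errors out of the agent's logs.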

We need one more tool, but Langchain doesn’t implement it, so we create a custom Tool. A tool requires a name, which GPT-3 uses as the action name when it refers to the tool. For best results, keep it short but descriptive. We also need a function that implements the tool’s action, and a description. In the description, we must explain what the tool does and what its expected input and output are. The description is not for people reading your code! It tells GPT-3 how to use the tool. If GPT-3 has trouble using the tool (it passes input in the wrong format or doesn’t use it at all), we need to tweak the description.

```python
        self.tools.append(
            Tool(
                name="extractorapi",
                func=ExtractorAPI().extract_from_url,
                description="Extracts text from a website. The input must be a valid URL to the website. In the output, you will get the text content. Example input: https://openai.com/blog/openai-and-microsoft-extend-partnership/",
            )
        )
```
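Why does the description matter so much? A zero-shot ReAct agent pastes each tool's name and description into the prompt it sends to GPT-3, and the model can only choose tools from that text. The sketch below approximates that rendering in plain Python. The ToolSpec dataclass and render_tool_section function are hypothetical stand-ins. "Search" is assumed here as the SerpAPI tool's name, and Langchain's actual prompt wording differs in detail.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ToolSpec:
    """Hypothetical stand-in for a tool: just the fields the model gets to read."""
    name: str
    description: str


def render_tool_section(tools: List[ToolSpec]) -> str:
    """Approximate how a zero-shot ReAct prompt lists tools for the model."""
    lines = [f"{tool.name}: {tool.description}" for tool in tools]
    names = ", ".join(tool.name for tool in tools)
    lines.append("")
    lines.append(f"Action: the action to take, should be one of [{names}]")
    return "\n".join(lines)


# Descriptions shortened for illustration; not Langchain's exact wording.
example_tools = [
    ToolSpec("Search", "A search engine. Input should be a search query."),
    ToolSpec(
        "extractorapi",
        "Extracts text from a website. The input must be a valid URL to the website.",
    ),
]
print(render_tool_section(example_tools))
```

A vague description here means a vague instruction to the model, which is why tweaking it is usually the first fix when GPT-3 misuses a tool.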

Finally, we finish the setup part by creating an instance of the Langchain agent with the tools we prepared:

```python
        self.agent = initialize_agent(
            self.tools,
            self.llm,
            agent="zero-shot-react-description",
            verbose=True,
            max_iterations=10,
        )
```

Langchain offers several agent implementations. We use zero-shot-react-description because it supports any number of tools and relies on their descriptions to decide which tool to use. The verbose=True parameter lets us observe the agent’s “thinking process” in the server’s logs. The max_iterations parameter caps the number of reasoning steps, which limits OpenAI API usage if the agent gets stuck. Without such a cap, a stuck agent could keep calling the API until it exceeds the token limit of a single OpenAI request or spends the entire budget of your OpenAI account.
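To see what max_iterations guards against, here is a stripped-down version of the loop a ReAct-style agent runs. The model writes an Action and an Action Input, and the agent runs the named tool and appends the result as an Observation. This repeats until the model emits a Final Answer or the iteration cap is reached. This is a simplified sketch, not Langchain's implementation. run_agent, scripted_llm, and the lookup tool are hypothetical, and a scripted function replaces GPT-3 so the example runs offline.

```python
import re
from typing import Callable, Dict, List

# Matches the "Action:" and "Action Input:" lines the model is asked to write.
ACTION_RE = re.compile(r"Action:\s*(.*?)\s*\nAction Input:\s*(.*)", re.DOTALL)
STOP_MESSAGE = "Stopped after reaching max_iterations."


def run_agent(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_iterations: int = 10,
) -> str:
    """Simplified ReAct loop: ask the model, run the chosen tool, feed back the result."""
    transcript = f"Question: {question}\nThought:"
    for _ in range(max_iterations):
        output = llm(transcript)  # one (billed) model call per iteration
        transcript += output
        if "Final Answer:" in output:
            return output.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(output)
        if match is None:
            observation = "Invalid format. Use 'Action:' and 'Action Input:' lines."
        else:
            name, tool_input = match.group(1), match.group(2).strip()
            tool = tools.get(name)
            observation = tool(tool_input) if tool else f"{name} is not a valid tool."
        # The tool result goes back into the prompt for the next model call.
        transcript += f"\nObservation: {observation}\nThought:"
    return STOP_MESSAGE


def scripted_llm(replies: List[str]) -> Callable[[str], str]:
    """Fake model that plays back canned replies in order (stands in for GPT-3)."""
    remaining = iter(replies)
    return lambda prompt: next(remaining)


demo_tools = {"lookup": lambda q: "Paris is the capital of France."}
demo_llm = scripted_llm([
    " I should look it up.\nAction: lookup\nAction Input: capital of France",
    " I now know the final answer.\nFinal Answer: Paris",
])
print(run_agent(demo_llm, demo_tools, "What is the capital of France?"))  # prints: Paris
```

A model that keeps asking for the same action never reaches "Final Answer:". In that case, only the cap ends the loop, and every extra iteration is another paid API call.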

Lastly, we add a run method that formats the prompt, starts the chain of GPT-3 interactions, and returns the final answer:

```python
    def run(self, query):
        agent_prompt = self.prompt.format(query=query)
        return self.agent.run(agent_prompt)
```

Implementing a custom tool for downloading websites in Langchain

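We need one more thing: the ExtractorAPI implementation. In this class, we call the extractorapi.com API (which also requires an API key, read from the EXTRACTOR_API_KEY environment variable) and get the text content of a page. Save the class in extractor_api.py so that the import at the top of the AI module works. Note that I return errors as a text response instead of raising an exception. GPT-3 receives the message and may correct itself when it tries to access an invalid URL.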

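```python
import os

import requests


class ExtractorAPI:
    def __init__(self):
        self.endpoint = "https://extractorapi.com/api/v1/extractor"
        self.api_key = os.environ.get("EXTRACTOR_API_KEY")

    def extract_from_url(self, url):
        try:
            params = {
                "apikey": self.api_key,
                "url": url,
            }
            # A timeout keeps a slow website from blocking the agent indefinitely.
            r = requests.get(self.endpoint, params=params, timeout=30)
            # Turn HTTP error statuses (bad key, unreachable page) into exceptions.
            r.raise_for_status()
            return r.json()["text"]
        except Exception as e:
            # Return the error as text so GPT-3 sees it and can retry with another URL.
            return f"Error: {e}. Is the URL valid?"
```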
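Returning the error as text is what lets the agent recover. The message becomes the next Observation, and GPT-3 can retry with a corrected URL. The same idea can be factored into a small wrapper that also rejects obviously malformed input before spending an API call. This is a sketch under my own assumptions. validated_url_tool and fake_extract are hypothetical names, and the check only looks at the scheme and host, not whether the page exists.

```python
from typing import Callable
from urllib.parse import urlparse


def validated_url_tool(extract: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap an extractor so bad input and failures come back as text, never exceptions."""
    def tool(url: str) -> str:
        url = url.strip().strip('"').strip("'")  # models sometimes quote their input
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            return (
                f"Error: {url!r} is not a valid http(s) URL. "
                "Pass a full URL such as https://example.com/page."
            )
        try:
            return extract(url)
        except Exception as e:  # the agent reads this text as an Observation
            return f"Error: {e}. Is the URL valid?"
    return tool


def fake_extract(url: str) -> str:
    # Hypothetical stand-in for ExtractorAPI().extract_from_url, so the sketch runs offline.
    if "missing" in url:
        raise ValueError("404 Not Found")
    return f"Text of {url}"


tool = validated_url_tool(fake_extract)
print(tool("example.com"))  # an error message the model can act on
```

In the AI class, func=validated_url_tool(ExtractorAPI().extract_from_url) could replace the original func= argument without other changes.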

How to use it

That’s all you need. To run the bot from Python code, create an instance of the AI class and call its run method:

```python
ai = AI()
print(ai.run("Find statistics about unemployment in European Union in 2022"))
```

If you prefer a Slack bot, go back to my previous article about creating a Slack bot with GPT-3 and scroll to the Slack bot section.
