PydanticAI is an agentic framework for building LLM applications. However, nothing stops us from using PydanticAI to build RAG applications. The main difference between RAG and AI Agents is that RAG uses a hard-coded workflow where we first retrieve the data from a data store and then use an LLM to generate a response. Agents are more flexible. The LLM can choose a tool from the provided list, and the flow of interactions is dynamic.

Table of Contents

- What Are We Building?

- Preparing the Database and the Ground Truth data

- Retrieval with HyDE

- Response Generation

- Complete RAG workflow

- Related Articles

When we build a RAG application, we have at least two components: retrieval and generation. We have to evaluate the performance of both components separately. And, in my opinion, retrieval is more important. If you make a mistake there, the performance of the rest of the application is irrelevant.

What Are We Building?

We will build an application to answer users’ questions using documents stored in a vector database.

In the retrieval step, we will use the HyDE technique (Hypothetical Document Embeddings): we ask the LLM to answer the user’s question without giving it any documents. The generated answer helps us find the most relevant data in the vector database. Instead of looking for documents similar to the question, we look for documents similar to the answer. Even if the generated answer isn’t correct, it should at least use wording similar to the actual answer. (To learn more about advanced RAG techniques, read Enhancing RAG System Accuracy - Advanced RAG techniques explained.)
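
The intuition can be checked without an LLM or a vector database. The sketch below is a toy illustration only: it replaces embeddings with bag-of-words cosine similarity, and the documents, the question, the hypothetical answer, and all function names are made up for this example. A question often shares its wording with other questions, while an answer, even an imperfect one, tends to share its wording with the document we actually want.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text and count its words (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def most_similar(query, documents):
    """Return the ID of the document closest to the query."""
    query_vector = bag_of_words(query)
    return max(
        documents,
        key=lambda doc_id: cosine_similarity(query_vector, bag_of_words(documents[doc_id])),
    )


# Made-up documents: doc-1 holds the real answer, doc-2 merely sounds like the question.
documents = {
    "doc-1": "Restart the router and wait two minutes before reconnecting the modem.",
    "doc-2": "What should I do when my invoice is wrong? Contact billing support.",
}
question = "What should I do when my internet connection drops?"
# A hand-written stand-in for the answer an LLM might generate in the HyDE step.
hypothetical_answer = "Unplug the router, wait a minute, then restart the modem and reconnect."

by_question = most_similar(question, documents)
by_answer = most_similar(hypothetical_answer, documents)
print(by_question, by_answer)
```

In this toy setup, searching with the question picks the document that merely repeats the question's phrasing, while searching with the hypothetical answer picks the document that contains the fix. Real embeddings are far less sensitive to exact wording, so the effect is usually smaller than here.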

The next step is to generate a response to the user’s question using the retrieved data as a context.

RAG Performance Evaluation

We will use the Ragas library to evaluate the performance of both steps. In the retrieval step, we compute the precision and recall metrics to determine whether we retrieved the relevant data. In the generation step, we use the faithfulness metric to determine whether the generated response is based on the provided context.
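
As a reminder of what the two retrieval metrics mean, here is a minimal sketch of the textbook, set-based definitions. It is not the Ragas implementation: as far as I know, the Ragas non-LLM metrics compare strings by similarity rather than by equality, and its precision also takes ranking into account. The function names and document IDs below are made up for the example.

```python
def context_precision(retrieved_ids, relevant_ids):
    """Fraction of retrieved items that are relevant."""
    if not retrieved_ids:
        return 0.0
    relevant = set(relevant_ids)
    hits = sum(1 for doc_id in retrieved_ids if doc_id in relevant)
    return hits / len(retrieved_ids)


def context_recall(retrieved_ids, relevant_ids):
    """Fraction of relevant items that were retrieved."""
    if not relevant_ids:
        return 0.0
    retrieved = set(retrieved_ids)
    hits = sum(1 for doc_id in relevant_ids if doc_id in retrieved)
    return hits / len(relevant_ids)


retrieved = ["doc-3", "doc-7", "doc-9", "doc-1"]
relevant = ["doc-3", "doc-1", "doc-5"]

precision = context_precision(retrieved, relevant)  # 2 of the 4 retrieved are relevant
recall = context_recall(retrieved, relevant)  # 2 of the 3 relevant were retrieved
print(precision, recall)
```

When we retrieve a single document per question and expect a single document, both numbers collapse to the same thing: the share of questions for which we found the right document.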

Request Tracking and Observability

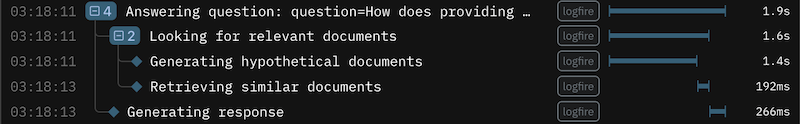

We will instrument all of our code with the Logfire library. Logfire will log the input parameters and the duration of every step in our workflow. In the Logfire dashboard, we will be able to see the application’s performance over time and debug any issues.

Preparing the Database and the Ground Truth data

We need a vector database containing the documents and a list of test questions. For each question, we also need to know which document we expect to be retrieved. For brevity, I will not include the setup code here. In the following code snippets, we assume the collection variable points to a collection in the ChromaDB vector database. We also assume the questions_and_answers variable contains a list of tuples, each with the question, the document ID, the document metadata (the title), and the document content.
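
If you want to follow along without setting up ChromaDB, the sketch below shows the data shapes the later snippets rely on. The sample rows and the FakeCollection class are hypothetical and exist only for this illustration. FakeCollection performs no similarity search; it only mimics the nested-list layout of a ChromaDB query result, which holds one inner list per query text.

```python
# Each tuple: (question, document ID, document title, document content).
questions_and_answers = [
    ("How do I reset my password?", "doc-1", "Password reset",
     "Open Settings and choose Reset password."),
    ("How do I cancel my plan?", "doc-2", "Cancellation",
     "Go to Billing and click Cancel plan."),
]


class FakeCollection:
    """Hypothetical in-memory stand-in that mimics the shape of a ChromaDB query result."""

    def __init__(self, rows):
        self.rows = rows

    def query(self, query_texts, n_results=1):
        ids, documents = [], []
        for _query in query_texts:
            # No real search: always return the first n_results rows.
            selected = self.rows[:n_results]
            ids.append([doc_id for (_question, doc_id, _title, _body) in selected])
            documents.append([body for (_question, _doc_id, _title, body) in selected])
        # One inner list per query text, hence the [0] indexing in the caller.
        return {"ids": ids, "documents": documents}


collection = FakeCollection(questions_and_answers)
result = collection.query(query_texts=["any text"], n_results=1)
first_query_ids = result["ids"][0]
first_query_documents = result["documents"][0]
print(first_query_ids, first_query_documents)
```

Because we always send a single query text, the retrieval function in the next section only reads the first inner list of ids and documents.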

Additionally, we have the OpenAI API key and Logfire API key set as environment variables.

Retrieval with HyDE

As mentioned earlier, we use HyDE to generate a (most likely wrong) answer, hoping it resembles the actual answer stored in the vector database. We will encapsulate the retrieval logic in the find_relevant_documents function. The function is wrapped in the logfire.instrument decorator, which logs the duration of the call and the input parameters. Inside the function, we use the logfire.span context manager to log the duration of the inner steps.

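```python
import logfire
from pydantic_ai import Agent

# Assumes the Logfire token is available as an environment variable (see above).
logfire.configure()


@logfire.instrument('Looking for relevant documents')
def find_relevant_documents(question, collection, documents_to_find=1):
    # Step 1: ask the LLM for a hypothetical answer, without any retrieved context.
    with logfire.span('Generating hypothetical documents'):
        # Note: newer PydanticAI releases rename result_type/.data to output_type/.output.
        hyde_agent = Agent(
            'openai:gpt-4o-mini',
            result_type=str,
            system_prompt=(
                "Write an answer to the user's question. Write a single paragraph."
            ),
        )
        hyde_answer = hyde_agent.run_sync(question)

    # Step 2: search for documents similar to the hypothetical answer, not the question.
    with logfire.span('Retrieving similar documents'):
        documents_found = collection.query(
            query_texts=[hyde_answer.data],
            n_results=documents_to_find,
        )

    # ChromaDB returns one result list per query text; we sent a single query.
    return documents_found['ids'][0], documents_found['documents'][0]
```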
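
To make the difference between wrapping a whole function and wrapping a step inside it concrete, here is a minimal stand-in built only on the standard library. It is not Logfire and does not send anything anywhere; the names timed, timed_span, records, and find_documents are hypothetical. It only shows the pattern: a decorator records the arguments and duration of the whole call, and a context manager records the duration of a block inside it.

```python
import functools
import time
from contextlib import contextmanager

records = []  # collected (name, duration_seconds, details) entries


@contextmanager
def timed_span(name, **details):
    """Time the body of a `with` block (stand-in for a tracing span)."""
    start = time.perf_counter()
    try:
        yield
    finally:
        records.append((name, time.perf_counter() - start, details))


def timed(name):
    """Decorator that times a whole call and records its arguments."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            with timed_span(name, args=args, kwargs=kwargs):
                return func(*args, **kwargs)
        return wrapper
    return decorator


@timed("Looking for relevant documents")
def find_documents(question):
    with timed_span("Generating hypothetical documents"):
        hypothetical = f"An answer to: {question}"
    with timed_span("Retrieving similar documents"):
        return [hypothetical]


found = find_documents("What is HyDE?")
span_names = [name for name, _duration, _details in records]
print(span_names)
```

The inner spans are recorded before the outer one because they finish first. A tracing tool uses the same nesting to draw the inner steps as children of the function call.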

Retrieval Evaluation

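We will use the Ragas library to evaluate the performance of the retrieval step. First, we have to call the function for every question in our test data and create an EvaluationDataset. Note that we pass document IDs instead of document text as the contexts, so the metrics compare the retrieved ID with the expected ID.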

```python
from ragas import evaluate, EvaluationDataset
from ragas.dataset_schema import SingleTurnSample

evaluation_dataset = []
for (question, doc_id, title, body) in questions_and_answers:
    retrieved_ids, retrieved_docs = find_relevant_documents(question, collection)
    # Compare document IDs, not document text: retrieved ID vs. expected ID.
    sample = SingleTurnSample(
        retrieved_contexts=[retrieved_ids[0]],
        reference_contexts=[doc_id]
    )
    evaluation_dataset.append(sample)

evaluation_dataset = EvaluationDataset(samples=evaluation_dataset)
```

Now, we can configure the metrics we want to calculate and pass them to the evaluate function. If you are interested in the available metrics, read Ragas Evaluation: In-Depth Insights published by Pixion.

```python
from ragas.metrics import NonLLMContextPrecisionWithReference, NonLLMContextRecall

eval_result = evaluate(
    dataset=evaluation_dataset,
    metrics=[NonLLMContextPrecisionWithReference(), NonLLMContextRecall()],
)
eval_result
```

In my case, I got: {'non_llm_context_precision_with_reference': 0.9000, 'non_llm_context_recall': 0.9000}, which is good enough for a tutorial. I have seen production systems with worse results. If you have such a problem, check out my Advanced RAG article or message me. I will help you improve the performance of your RAG system.

Response Generation

When we have the relevant documents, we can use them to generate a response to the user’s question. Let’s implement the answer_question function to create a context from the documents and pass the context to the LLM. As before, we wrap the function in the logfire.instrument decorator to get tracing data.

```python
@logfire.instrument('Generating response')
def answer_question(question, relevant_documents):
    # Wrap every document in <doc> tags and the whole list in <documents> tags.
    context = [f"<doc>{txt}</doc>" for txt in relevant_documents]
    context = "\n".join(context)
    context = f"<documents>{context}</documents>"

    # The context is part of the system prompt; the question is the user message.
    answering_agent = Agent(
        'openai:gpt-4o-mini',
        result_type=str,
        system_prompt=(
            f"Answer the user's question using only the information provided below. If the given text doesn't contain an answer, say 'I don't know'. \n\n{context}"
        ),
    )
    answer = answering_agent.run_sync(question)
    return answer.data
```
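
To see exactly what the model receives, the context-building part can be run on its own. The sketch below repeats the same three string operations in a helper; the name build_context and the sample documents are made up for this illustration.

```python
def build_context(relevant_documents):
    """Wrap each document in <doc> tags and the whole list in <documents> tags."""
    wrapped = [f"<doc>{text}</doc>" for text in relevant_documents]
    joined = "\n".join(wrapped)
    return f"<documents>{joined}</documents>"


context = build_context(["First document.", "Second document."])
print(context)
```

The tags give the model a clear boundary between documents. The document text is not escaped, so a document that itself contains a closing doc tag would blur that boundary. For a tutorial this is fine, but it is worth remembering when the documents come from untrusted sources.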

Faithfulness Evaluation

We want to check if the generated response is based on the provided context. We will use the Ragas library to evaluate the performance of the generation step. This time, we will use the Faithfulness metric and test the answer_question function.

```python
from ragas.metrics import Faithfulness

evaluation_dataset = []
for (question, doc_id, title, body) in questions_and_answers:
    # Test generation in isolation: use the expected document instead of running retrieval.
    retrieved_docs = [body]
    answer = answer_question(question, retrieved_docs)
    sample = SingleTurnSample(
        user_input=question,
        response=answer,
        retrieved_contexts=retrieved_docs
    )
    evaluation_dataset.append(sample)

evaluation_dataset = EvaluationDataset(samples=evaluation_dataset)

eval_result = evaluate(
    dataset=evaluation_dataset,
    metrics=[Faithfulness()],
)
eval_result
```
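
The idea behind the faithfulness score can be sketched in a few lines: split the answer into claims, check each claim against the context, and report the share of supported claims. In Ragas, an LLM does both the splitting and the checking. In the sketch below, the claims and verdicts are written by hand, and the function name is hypothetical, so it shows only the final arithmetic.

```python
def faithfulness_score(claim_verdicts):
    """Share of claims in the answer that the context supports."""
    if not claim_verdicts:
        return 0.0
    return sum(claim_verdicts.values()) / len(claim_verdicts)


# Hand-written verdicts: True means the retrieved context supports the claim.
claim_verdicts = {
    "The plan can be cancelled from the Billing page.": True,
    "Cancellation takes effect immediately.": False,
}

score = faithfulness_score(claim_verdicts)
print(score)
```

A score of 1.0 means every claim in the answer can be traced back to the context. Anything lower means the model added something the documents did not say.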

Complete RAG workflow

Finally, we can combine all the steps and create a complete RAG workflow.

```python
@logfire.instrument('Answering question: {question=}')
def rag_with_sources(question, collection):
    retrieved_ids, retrieved_docs = find_relevant_documents(question, collection)
    answer = answer_question(question, retrieved_docs)
    return answer, (retrieved_ids, retrieved_docs)
```

The Logfire decorator logs the question as part of the trace name, so we can easily find the relevant trace in the dashboard. My RAG implementation returns the retrieved documents along with the answer because, in many RAG systems, we want to display the source data in the UI.

Related Articles

- Enhancing RAG System Accuracy - Advanced RAG techniques explained

- Measuring performance of AI agents with Ragas

- RAG with structured output using PydanticAI

- How to prevent LLM hallucinations from reaching the users in RAG systems
